A Quick Note for Perf CVE-2024-46713

CVE-2024-46713 is a race condition vulnerability in the Linux perf subsystem. Binary Gecko has published a post introducing the issue. These are my quick notes from analyzing the vulnerability; they also document the relevant mechanisms of the perf subsystem.

1. Perf Ring Buffer

Perf provides users with different types of PMUs (Performance Monitoring Units). When a new event is initialized, perf_init_event() iterates over the registered PMUs and tries to initialize the event with each one.

static LIST_HEAD(pmus);

static struct pmu *perf_init_event(struct perf_event *event)
{
	// [...]
	list_for_each_entry_rcu(pmu, &pmus, entry, lockdep_is_held(&pmus_srcu)) {
		ret = perf_try_init_event(pmu, event);
	}
}

Subsystems or drivers can register a PMU handler using the perf_pmu_register() function.

static inline void perf_tp_register(void)
{
	perf_pmu_register(&perf_tracepoint, "tracepoint", PERF_TYPE_TRACEPOINT);
	// [...]
}
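
To make the lookup concrete, here is a small userspace model of the registry. It is only a sketch and not kernel code: `toy_pmu`, `toy_pmu_register()`, `toy_event_init()` and `toy_init_event()` are hypothetical names, and I assume each PMU simply rejects events of a foreign type with -ENOENT. The real code walks an RCU-protected list and perf_try_init_event() does considerably more.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical userspace stand-in for struct pmu. */
struct toy_pmu {
	const char *name;
	int type;                                   /* e.g. a PERF_TYPE_* value */
	int (*event_init)(int event_type, int pmu_type);
	struct toy_pmu *next;
};

static struct toy_pmu *toy_pmus;                    /* models the global `pmus` list */

/* Models perf_pmu_register(): link the PMU into the global list. */
static void toy_pmu_register(struct toy_pmu *pmu)
{
	assert(pmu && pmu->event_init);
	pmu->next = toy_pmus;
	toy_pmus = pmu;
}

/* Assumption: a PMU accepts only events of its own type. */
static int toy_event_init(int event_type, int pmu_type)
{
	return event_type == pmu_type ? 0 : -ENOENT;
}

/* Models perf_init_event(): try each PMU until one accepts the event. */
static struct toy_pmu *toy_init_event(int event_type)
{
	for (struct toy_pmu *pmu = toy_pmus; pmu; pmu = pmu->next) {
		if (pmu->event_init(event_type, pmu->type) == 0)
			return pmu;
	}
	return NULL;                                /* no PMU claimed the event */
}
```

After registering a few `toy_pmu` objects, `toy_init_event(type)` returns the one whose type matches, or NULL if none does.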

Each perf event object can have a ring buffer (rb), represented by struct perf_buffer, which is created when the event fd is mapped with mmap() [2].

static const struct file_operations perf_fops = {
	// [...]
	.mmap = perf_mmap,
};

static int perf_mmap(struct file *file, struct vm_area_struct *vma)
{
	struct perf_buffer *rb = NULL;
	// [...]
	if (!rb) {
		rb = rb_alloc(nr_pages, // [2]
			      event->attr.watermark ? event->attr.wakeup_watermark : 0,
			      event->cpu, flags);
		ring_buffer_attach(event, rb);
	}
	// [...]
}

Perf also supports multiple perf events sharing the same ring buffer. An event without its own ring buffer can call ioctl(PERF_EVENT_IOC_SET_OUTPUT) to attach to the ring buffer of another event, passing the fd of that other event as the argument. Passing -1 instead detaches the event from the ring buffer it was previously attached to.

static long _perf_ioctl(struct perf_event *event, unsigned int cmd, unsigned long arg)
{
	// [...]
	switch (cmd) {
	case PERF_EVENT_IOC_SET_OUTPUT:
	{
		int ret;
		if (arg != -1) {
			struct perf_event *output_event;
			struct fd output;
			ret = perf_fget_light(arg, &output);
			output_event = output.file->private_data;
			ret = perf_event_set_output(event, output_event);
			fdput(output);
		} else {
			ret = perf_event_set_output(event, NULL);
		}
		return ret;
	}
	}
	// [...]
}

static int
perf_event_set_output(struct perf_event *event, struct perf_event *output_event)
{
	struct perf_buffer *rb = NULL;
	if (output_event) {
		rb = ring_buffer_get(output_event);
		// [...]
	}
	ring_buffer_attach(event, rb);
	// [...]
}

static void ring_buffer_attach(struct perf_event *event,
			       struct perf_buffer *rb)
{
	// [...]
	if (event->rb) {
		old_rb = event->rb;
	}
	// [...]
	rcu_assign_pointer(event->rb, rb);
	// [...]
	if (old_rb) {
		ring_buffer_put(old_rb);
	}
}
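
The reference counting behind attach and detach can be sketched in userspace as follows. This is a simplified model under my own assumptions, not the kernel implementation: all `toy_*` names are hypothetical, the refcount is a plain int, and RCU, locking and the mmap counters are left out entirely.

```c
#include <assert.h>
#include <stdlib.h>

struct toy_rb    { int refcount; };
struct toy_event { struct toy_rb *rb; };

static int toy_rb_freed;                 /* counts freed buffers, for illustration */

/* Models rb_alloc(): a new buffer starts with one reference. */
static struct toy_rb *toy_rb_alloc(void)
{
	struct toy_rb *rb = calloc(1, sizeof(*rb));
	if (rb)
		rb->refcount = 1;
	return rb;
}

/* Models ring_buffer_put(): free the buffer on the last put. */
static void toy_rb_put(struct toy_rb *rb)
{
	assert(rb->refcount > 0);
	if (--rb->refcount == 0) {
		free(rb);
		toy_rb_freed++;
	}
}

/* Models ring_buffer_attach(): swap the pointer, then drop the old buffer. */
static void toy_attach(struct toy_event *event, struct toy_rb *rb)
{
	struct toy_rb *old = event->rb;

	event->rb = rb;                  /* the caller's reference moves to the event */
	if (old)
		toy_rb_put(old);
}

/* Models perf_event_set_output(): output == NULL means detach. */
static void toy_set_output(struct toy_event *event, struct toy_event *output)
{
	struct toy_rb *rb = NULL;

	if (output && output->rb) {
		rb = output->rb;
		rb->refcount++;          /* models ring_buffer_get() */
	}
	toy_attach(event, rb);
}
```

With two events `a` and `b`, `toy_set_output(&b, &a)` leaves both pointing at the same buffer with two references, and `toy_set_output(&b, NULL)` drops `b`'s reference again.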

During mmap, although rb_alloc() allocates and initializes the new ring buffer, it does not immediately populate the vma.

struct perf_buffer *rb_alloc(int nr_pages, long watermark, int cpu, int flags)
{
	struct perf_buffer *rb;
	unsigned long size;
	int i, node;

	size = sizeof(struct perf_buffer);
	size += nr_pages * sizeof(void *);
	rb = kzalloc_node(size, GFP_KERNEL, node);
	rb->user_page = perf_mmap_alloc_page(cpu);
	for (i = 0; i < nr_pages; i++) {
		rb->data_pages[i] = perf_mmap_alloc_page(cpu);
	}
	rb->nr_pages = nr_pages;
	ring_buffer_init(rb, watermark, flags);
	return rb;
}

The kernel populates the mapped page only when a page fault occurs, through the perf_mmap_fault() function. Only the first page, rb->user_page, can be writable [3], while all other pages, such as rb->data_pages[] and rb->aux_pages[], are read-only.

static vm_fault_t perf_mmap_fault(struct vm_fault *vmf)
{
	struct perf_event *event = vmf->vma->vm_file->private_data;
	struct perf_buffer *rb;
	vm_fault_t ret = VM_FAULT_SIGBUS;
	// [...]
	rcu_read_lock();
	rb = rcu_dereference(event->rb);
	if (vmf->pgoff && (vmf->flags & FAULT_FLAG_WRITE)) // [3]
		goto unlock;
	vmf->page = perf_mmap_to_page(rb, vmf->pgoff);
	get_page(vmf->page);
	// [...]
	rcu_read_unlock();
	return ret;
}

The writable page rb->user_page is a struct perf_event_mmap_page object, which stores various metadata related to the event object’s pages. Additionally, users can modify this page to configure the AUX region.

// include/uapi/linux/perf_event.h
struct perf_event_mmap_page {
	// [...]
	__u64 aux_head;
	__u64 aux_tail;
	__u64 aux_offset;
	__u64 aux_size;
};

2. AUX Region

If the mmap handler perf_mmap() detects that the mmap offset does not start from the first page [1], it tries to map the AUX region. The aux_offset and aux_size of the AUX region are read from rb->user_page. The function ensures that aux_offset does not overlap rb->user_page or rb->data_pages[] [2], and that both aux_offset [3] and aux_size [4] match the parameters passed to the mmap syscall. Once all checks pass, it calls rb_alloc_aux() to allocate the AUX region [5].

static int perf_mmap(struct file *file, struct vm_area_struct *vma)
{
	// [...]
	if (vma->vm_pgoff == 0) { // [1]
		// [...]
	} else {
		nr_pages = vma_size / PAGE_SIZE;
		rb = event->rb;
		aux_offset = READ_ONCE(rb->user_page->aux_offset);
		aux_size = READ_ONCE(rb->user_page->aux_size);
		if (aux_offset < perf_data_size(rb) + PAGE_SIZE) // [2]
			goto aux_unlock;
		if (aux_offset != vma->vm_pgoff << PAGE_SHIFT) // [3]
			goto aux_unlock;
		if (aux_size != vma_size || aux_size != nr_pages * PAGE_SIZE) // [4]
			goto aux_unlock;
	}
	// [...]
	else {
		ret = rb_alloc_aux(rb, event, vma->vm_pgoff, nr_pages, // [5]
				   event->attr.aux_watermark, flags);
	}
	// [...]
}
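
Checks [2] to [4] are plain arithmetic, so they are easy to replay in userspace. The helper below is a sketch with hypothetical names (`toy_aux_mmap_ok`, `TOY_PAGE_SIZE`) and assumes 4 KiB pages; it covers only the three checks quoted above, while the real perf_mmap() performs further validation that is elided here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_PAGE_SHIFT 12                              /* assumption: 4 KiB pages */
#define TOY_PAGE_SIZE  (UINT64_C(1) << TOY_PAGE_SHIFT)

/*
 * data_size:             what perf_data_size(rb) would return
 * aux_offset / aux_size: values the user wrote into rb->user_page
 * vm_pgoff / vma_size:   page offset and length passed to mmap()
 */
static bool toy_aux_mmap_ok(uint64_t data_size,
			    uint64_t aux_offset, uint64_t aux_size,
			    uint64_t vm_pgoff, uint64_t vma_size)
{
	uint64_t nr_pages = vma_size / TOY_PAGE_SIZE;

	assert(vma_size > 0);
	if (aux_offset < data_size + TOY_PAGE_SIZE)        /* [2] overlaps user/data pages */
		return false;
	if (aux_offset != vm_pgoff << TOY_PAGE_SHIFT)      /* [3] offset mismatch */
		return false;
	if (aux_size != vma_size ||
	    aux_size != nr_pages * TOY_PAGE_SIZE)          /* [4] size mismatch */
		return false;
	return true;
}
```

For example, with no data pages, aux_offset = 0x10000 and aux_size = 0x1000, an mmap() of 0x1000 bytes at file offset 0x10000 (vm_pgoff = 0x10) passes all three checks.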

Not every PMU supports an AUX region. Therefore, rb_alloc_aux() first checks whether the PMU associated with the event has defined a setup_aux handler [6]. If setup_aux is defined, the kernel proceeds to allocate AUX pages [7] and initialize the AUX region [8].

int rb_alloc_aux(struct perf_buffer *rb, struct perf_event *event,
		 pgoff_t pgoff, int nr_pages, long watermark, int flags)
{
	if (!has_aux(event)) // [6]
		return -EOPNOTSUPP;
	// [...]
	rb->aux_pages = kcalloc_node(nr_pages, sizeof(void *), GFP_KERNEL,
				     node);
	rb->free_aux = event->pmu->free_aux;
	for (rb->aux_nr_pages = 0; rb->aux_nr_pages < nr_pages;) {
		struct page *page;
		int last, order;
		order = min(max_order, ilog2(nr_pages - rb->aux_nr_pages));
		page = rb_alloc_aux_page(node, order); // [7]
		// [...]
	}
	rb->aux_priv = event->pmu->setup_aux(event, rb->aux_pages, nr_pages, // [8]
					     overwrite);
	// [...]
}

static inline bool has_aux(struct perf_event *event)
{
	return event->pmu->setup_aux;
}
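
The `order = min(max_order, ilog2(remaining))` line decides how the AUX pages are carved into higher-order allocations. The sketch below replays just that arithmetic in userspace. The names are hypothetical, `max_order` is treated as a plain parameter, and I assume every allocation succeeds at the requested order, which the kernel does not guarantee.

```c
#include <assert.h>

/* floor(log2(n)) for n > 0, standing in for the kernel's ilog2(). */
static int toy_ilog2(unsigned long n)
{
	int r = 0;

	assert(n > 0);
	while (n >>= 1)
		r++;
	return r;
}

/*
 * Record the order of each chunk needed to cover nr_pages pages.
 * Returns the number of chunks written to orders[] (at most cap).
 */
static int toy_aux_chunks(int nr_pages, int max_order, int *orders, int cap)
{
	int done = 0, n = 0;

	while (done < nr_pages && n < cap) {
		int order = toy_ilog2((unsigned long)(nr_pages - done));

		if (order > max_order)   /* min(max_order, ilog2(remaining)) */
			order = max_order;
		orders[n++] = order;
		done += 1 << order;      /* one chunk covers 2^order pages */
	}
	return n;
}
```

With nr_pages = 16 and max_order = 2, the loop produces four order-2 chunks of four pages each.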

Unmapping is handled by the perf_mmap_close() function. It first checks whether the memory being unmapped belongs to the AUX region. If so, it decrements rb->aux_mmap_count and, when the count reaches zero, releases the AUX region while holding rb->aux_mutex, so that the teardown is serialized against other users of the same ring buffer.

static void perf_mmap_close(struct vm_area_struct *vma)
{
	// [...]
	if (rb_has_aux(rb) && vma->vm_pgoff == rb->aux_pgoff &&
	    atomic_dec_and_mutex_lock(&rb->aux_mmap_count, &rb->aux_mutex)) {
		rb_free_aux(rb);
		mutex_unlock(&rb->aux_mutex);
	}
	// [...]
}

void rb_free_aux(struct perf_buffer *rb)
{
	if (refcount_dec_and_test(&rb->aux_refcount))
		__rb_free_aux(rb);
}

static void __rb_free_aux(struct perf_buffer *rb)
{
	int pg;
	// [...]
	if (rb->aux_nr_pages) {
		for (pg = 0; pg < rb->aux_nr_pages; pg++)
			rb_free_aux_page(rb, pg);
		kfree(rb->aux_pages);
		rb->aux_nr_pages = 0;
	}
}
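
The behavior of atomic_dec_and_mutex_lock() in perf_mmap_close() is easy to misread, so here is a userspace model of its semantics as I understand them: the counter is decremented, and the function returns true with the mutex held only when the counter reached zero. The sketch uses C11 atomics and a pthread mutex; `toy_dec_and_mutex_lock()` is a hypothetical name, not the kernel helper itself.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Decrement *cnt; return true with `lock` held only if it dropped to zero. */
static bool toy_dec_and_mutex_lock(atomic_int *cnt, pthread_mutex_t *lock)
{
	int old = atomic_load(cnt);

	assert(old > 0);
	/* Fast path: decrement without the lock while zero cannot be reached. */
	while (old > 1) {
		if (atomic_compare_exchange_weak(cnt, &old, old - 1))
			return false;
		/* CAS failed: `old` now holds the current value, so retry. */
	}

	/* We might reach zero, so take the lock before decrementing. */
	pthread_mutex_lock(lock);
	if (atomic_fetch_sub(cnt, 1) != 1) {
		/* The count was raised in the meantime; zero was not reached. */
		pthread_mutex_unlock(lock);
		return false;
	}
	return true;                     /* count is zero and the caller owns the lock */
}
```

The caller that sees `true` is the one responsible for the teardown and for unlocking afterwards, which is the pattern perf_mmap_close() follows around rb_free_aux().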

3. Root Cause & Exploitation

Before the patch, however, the lock taken here was event->mmap_mutex rather than a mutex owned by the ring buffer. Each event has its own mmap_mutex, and two different events can share the same ring buffer through ioctl(PERF_EVENT_IOC_SET_OUTPUT), so perf_mmap() on one event and perf_mmap_close() on the other could operate on the same ring buffer concurrently.

@@ -6373,12 +6374,11 @@ static void perf_mmap_close(struct vm_area_struct *vma)
 		event->pmu->event_unmapped(event, vma->vm_mm);
 	/*
-	 * rb->aux_mmap_count will always drop before rb->mmap_count and
-	 * event->mmap_count, so it is ok to use event->mmap_mutex to
-	 * serialize with perf_mmap here.
+	 * The AUX buffer is strictly a sub-buffer, serialize using aux_mutex
+	 * to avoid complications.
 	 */
 	if (rb_has_aux(rb) && vma->vm_pgoff == rb->aux_pgoff &&
-	    atomic_dec_and_mutex_lock(&rb->aux_mmap_count, &event->mmap_mutex)) {
+	    atomic_dec_and_mutex_lock(&rb->aux_mmap_count, &rb->aux_mutex)) {
@@ -6531,6 +6532,9 @@ static int perf_mmap(struct file *file, struct vm_area_struct *vma)
 		if (!rb)
 			goto aux_unlock;
+		aux_mutex = &rb->aux_mutex;
+		mutex_lock(aux_mutex);
+
 		aux_offset = READ_ONCE(rb->user_page->aux_offset);
 		aux_size = READ_ONCE(rb->user_page->aux_size);
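
Why a per-event mutex cannot protect a shared ring buffer can be shown with a tiny userspace experiment. This is a model under my own assumptions, with hypothetical `toy_*` names and pthread mutexes standing in for the kernel ones: two events point at one buffer, and we ask whether holding the lock chosen for the first event would make a path entered through the second event wait.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

struct toy_shared_rb    { pthread_mutex_t aux_mutex; };
struct toy_locked_event { pthread_mutex_t mmap_mutex; struct toy_shared_rb *rb; };

/* Pre-patch choice: every event locks its own mmap_mutex. */
static pthread_mutex_t *toy_lock_prepatch(struct toy_locked_event *e)
{
	return &e->mmap_mutex;
}

/* Post-patch choice: every event locks the mutex of the shared buffer. */
static pthread_mutex_t *toy_lock_postpatch(struct toy_locked_event *e)
{
	return &e->rb->aux_mutex;
}

/*
 * Returns true if a path entered through `b` would have to wait while a
 * path entered through `a` is inside its critical section.
 */
static bool toy_serialized(struct toy_locked_event *a, struct toy_locked_event *b,
			   pthread_mutex_t *(*pick)(struct toy_locked_event *))
{
	bool blocked;

	assert(a->rb == b->rb);          /* both events share one ring buffer */
	pthread_mutex_lock(pick(a));
	blocked = pthread_mutex_trylock(pick(b)) != 0;
	if (!blocked)
		pthread_mutex_unlock(pick(b));
	pthread_mutex_unlock(pick(a));
	return blocked;
}
```

With the pre-patch choice the second path is not blocked, because the two events lock different mutexes; with the post-patch choice both paths contend on the same rb-level mutex.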

The perf_mmap() function allows a process to re-attach to the AUX region if it already exists. In that case it only increments rb->aux_mmap_count and returns immediately.

static inline bool rb_has_aux(struct perf_buffer *rb)
{
	return !!rb->aux_nr_pages;
}

static int perf_mmap(struct file *file, struct vm_area_struct *vma)
{
	// [...]
	else {
		// [...]
		if (rb_has_aux(rb)) {
			atomic_inc(&rb->aux_mmap_count);
			ret = 0;
			goto unlock;
		}
	}
	// [...]
}

The next time the mapped memory is accessed and a page fault is triggered, the page fault handler perf_mmap_fault() will map the AUX pages into the process’s virtual memory.

static vm_fault_t perf_mmap_fault(struct vm_fault *vmf)
{
	vmf->page = perf_mmap_to_page(rb, vmf->pgoff);
	// [...]
}

struct page *
perf_mmap_to_page(struct perf_buffer *rb, unsigned long pgoff)
{
	if (rb->aux_nr_pages) {
		if (pgoff >= rb->aux_pgoff) {
			int aux_pgoff = array_index_nospec(pgoff - rb->aux_pgoff, rb->aux_nr_pages);
			return virt_to_page(rb->aux_pages[aux_pgoff]);
		}
	}
	// [...]
}

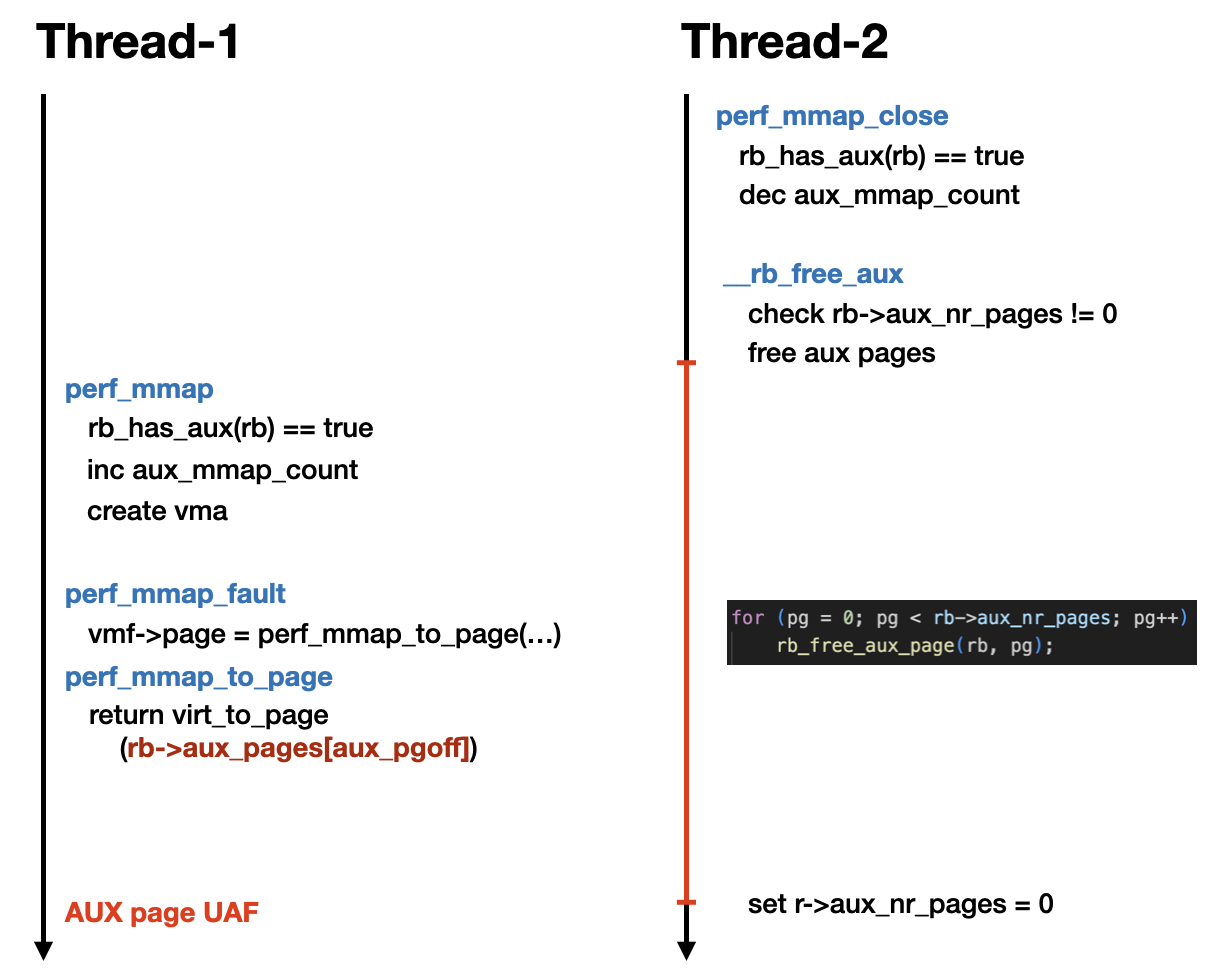

One of the scenarios that leads to a race condition is as follows:

Once the race is won, we can access the freed AUX pages. However, because of the check in perf_mmap_fault(), the AUX region is only mapped read-only. At this point, we can spray a large number of writable rb->user_page pages to reclaim the freed AUX pages.

Subsequently, by using madvise(MADV_DONTNEED) to unmap the overlapping rb->user_page without releasing the vma, we can pivot the vulnerability into a writable page UAF primitive. With this primitive, the rest of the exploit becomes straightforward.
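
The effect of madvise(MADV_DONTNEED), dropping the mapped pages while keeping the vma, can be observed on ordinary memory. The demo below is a hedged illustration on a private anonymous mapping, not on a perf mapping, and `toy_dontneed_demo()` is a hypothetical name. For anonymous memory the next access gets a fresh zero page; for a perf mapping the next access would instead go through perf_mmap_fault() again.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Returns the byte read back after MADV_DONTNEED, or -1 on error. */
static int toy_dontneed_demo(void)
{
	long page = sysconf(_SC_PAGESIZE);
	size_t len;
	unsigned char *p;
	int val;

	if (page <= 0)
		return -1;
	len = (size_t)page;

	p = mmap(NULL, len, PROT_READ | PROT_WRITE,
		 MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
	if (p == MAP_FAILED)
		return -1;

	memset(p, 0x41, len);                 /* fault the page in and dirty it */
	assert(p[0] == 0x41);

	if (madvise(p, len, MADV_DONTNEED)) { /* drop the page, keep the vma */
		munmap(p, len);
		return -1;
	}

	val = p[0];                           /* still mapped: triggers a new fault */
	munmap(p, len);
	return val;
}
```

The address range stays valid after the madvise() call, so the read does not crash; it simply faults in a new page.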

4. Others

In the kernelCTF environment, several PMUs are enabled:

perf_breakpoint, perf_uprobe, pmu_msr, perf_tracepoint, perf_kprobe, perf_swevent, perf_task_clock, perf_cpu_clock

However, none of these PMUs define setup_aux, which means this vulnerability cannot be exploited in the kernelCTF environment 🙁.

Below is a code snippet that creates an AUX region and attaches the ring buffer to another event.

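#define _GNU_SOURCE
#include <linux/perf_event.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <sys/syscall.h>
#include <unistd.h>

// glibc has no wrapper for perf_event_open(), so go through syscall()
static int perf_event_open(struct perf_event_attr *attr, pid_t pid, int cpu,
			   int group_fd, unsigned long flags)
{
	return syscall(SYS_perf_event_open, attr, pid, cpu, group_fd, flags);
}

static void perror_exit(const char *msg) { perror(msg); exit(1); }

int main(void)
{
	struct perf_event_attr attr;
	int fd1, fd2;
	void *ptr;

	memset(&attr, 0, sizeof(attr));
	attr.type = PERF_TYPE_SOFTWARE;
	attr.size = sizeof(attr);
	attr.disabled = 1;
	attr.exclude_kernel = 1;
	attr.read_format = PERF_FORMAT_GROUP;

	fd1 = perf_event_open(&attr, 0, -1, -1, 0);
	fd2 = perf_event_open(&attr, 0, -1, -1, 0);

	struct perf_event_mmap_page *user_page = mmap(NULL, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED, fd1, 0); // 0x1000 perf_event_mmap_page
	if (user_page == MAP_FAILED) perror_exit("[-] mmap event");

	// setup AUX region
	user_page->aux_offset = 0x10000;
	user_page->aux_size = 0x1000;
	// needs a PMU that defines setup_aux; otherwise rb_alloc_aux() fails with -EOPNOTSUPP
	ptr = mmap(NULL, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED, fd1, 0x10000);
	if (ptr == MAP_FAILED) perror_exit("[-] mmap AUX");

	// fd2 attach fd1's ring buffer
	ioctl(fd2, PERF_EVENT_IOC_SET_OUTPUT, fd1);
	return 0;
}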