Some Casual Notes for CVE-2024-26921

CVE-2024-26921 is a vulnerability in the inet subsystem that has been demonstrated to be exploitable in kernelCTF. The fix commit can be found here, and syzbot has also provided a KASAN report about this bug. In this post, I will provide an overview of this vulnerability.

1. Sock

1.1. Allocation

When a socket is created using sys_socket, the kernel allocates a struct sock object and sets its write memory refcount (sk->sk_wmem_alloc) to 1 [1]. Where the object comes from (a dedicated kmem_cache or the generic kmalloc slabs) is determined by the protocol (struct proto) object [2].

    struct sock *sk_alloc(struct net *net, int family, gfp_t priority,
                          struct proto *prot, int kern)
    {
        struct sock *sk;

        sk = sk_prot_alloc(prot, priority | __GFP_ZERO, family);
        // [...]
        refcount_set(&sk->sk_wmem_alloc, 1); // [1]
        // [...]
    }

    static struct sock *sk_prot_alloc(struct proto *prot, gfp_t priority,
                                      int family)
    {
        struct sock *sk;
        struct kmem_cache *slab;

        // [...]
        slab = prot->slab; // [2]
        if (slab != NULL) {
            sk = kmem_cache_alloc(slab, priority & ~__GFP_ZERO);
            // [...]
        } else
            sk = kmalloc(prot->obj_size, priority);
        // [...]
    }

1.2. Update

When sending a packet, __ip_append_data() first binds the skb object to sk [1]. It then enqueues the skb [2] and updates the write memory refcount [3].

    static int __ip_append_data(struct sock *sk,
                                /* ... */)
    {
        // [...]
        if (!skb->destructor) {
            skb->destructor = sock_wfree;
            skb->sk = sk; // [1]
            wmem_alloc_delta += skb->truesize;
        }
        __skb_queue_tail(queue, skb); // [2]
        // [...]
        if (wmem_alloc_delta)
            refcount_add(wmem_alloc_delta, &sk->sk_wmem_alloc); // [3]
        // [...]
    }
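To make the accounting concrete, here is a minimal userspace sketch of it. The toy_sock and toy_skb types and the toy_* functions are hypothetical stand-ins I made up for illustration, not kernel code; the only thing they model is that the counter starts at 1 and grows by each skb's truesize.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical userspace stand-ins; these are not the kernel structures. */
struct toy_sock {
    unsigned int wmem_alloc;   /* models sk->sk_wmem_alloc */
};

struct toy_skb {
    struct toy_sock *sk;       /* models skb->sk */
    unsigned int truesize;     /* models skb->truesize */
};

/* Models sk_alloc(): the counter starts at 1, not 0. */
void toy_sk_init(struct toy_sock *sk)
{
    sk->wmem_alloc = 1;
}

/* Models the accounting in __ip_append_data(): bind the skb to sk and
 * charge its truesize to the socket's write memory counter. */
void toy_append(struct toy_sock *sk, struct toy_skb *skb, unsigned int truesize)
{
    assert(truesize > 0);
    skb->sk = sk;
    skb->truesize = truesize;
    sk->wmem_alloc += truesize;
}
```

After toy_sk_init() and two toy_append() calls with sizes 768 and 1280, the counter is 1 + 768 + 1280: the initial reference plus one charge per queued skb.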

1.3. Free

When an skb is detached from its owner sk, for example through skb_orphan(), its destructor is called. For the skbs set up above, that destructor is sock_wfree().

    static inline void skb_orphan(struct sk_buff *skb)
    {
        if (skb->destructor) {
            skb->destructor(skb); // <----------
            skb->destructor = NULL;
            skb->sk = NULL;
        }
        // [...]
    }

The destructor function sock_wfree() updates the write memory refcount and releases the sk object if the refcount drops to zero [1].

    void sock_wfree(struct sk_buff *skb)
    {
        struct sock *sk = skb->sk;
        unsigned int len = skb->truesize;

        // [...]
        if (refcount_sub_and_test(len, &sk->sk_wmem_alloc))
            __sk_free(sk); // [1]
    }

    static void __sk_free(struct sock *sk)
    {
        // [...]
        else
            sk_destruct(sk); // <----------
    }

    void sk_destruct(struct sock *sk)
    {
        // [...]
        else
            __sk_destruct(&sk->sk_rcu); // <----------
    }

    static void __sk_destruct(struct rcu_head *head)
    {
        // [...]
        sk_prot_free(sk->sk_prot_creator, sk); // <----------
    }

    static void sk_prot_free(struct proto *prot, struct sock *sk)
    {
        struct kmem_cache *slab;

        slab = prot->slab;
        // [...]
        if (slab != NULL)
            kmem_cache_free(slab, sk);
        else
            kfree(sk);
    }

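When a socket is released through a syscall such as sys_close or sys_exit, the sk object drops the initial write memory reference that was set at allocation. The other refcount, sk->sk_refcnt, can be somewhat confusing; it appears to act as a higher-level refcount that tracks the kernel's direct usage of the sk object. Only when sk_refcnt drops to zero does sk_free() give up the initial sk_wmem_alloc reference.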

    int inet_release(struct socket *sock)
    {
        struct sock *sk = sock->sk;

        if (sk) {
            // [...]
            sk->sk_prot->close(sk, timeout); // <----------
            sock->sk = NULL;
        }
        return 0;
    }

    void tcp_close(struct sock *sk, long timeout)
    {
        // [...]
        sock_put(sk); // <----------
    }

    static inline void sock_put(struct sock *sk)
    {
        if (refcount_dec_and_test(&sk->sk_refcnt))
            sk_free(sk); // <----------
    }

    void sk_free(struct sock *sk)
    {
        if (refcount_dec_and_test(&sk->sk_wmem_alloc)) // <----------
            __sk_free(sk);
    }
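The interplay between the two counters is easier to see in a small model. The sketch below is a userspace approximation under my own assumptions: the toy_* names are hypothetical, plain integers replace refcount_t, and a freed flag replaces the real release chain (__sk_free() and friends).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical userspace model; field names only mirror the kernel's. */
struct toy_sock {
    unsigned int refcnt;       /* models sk->sk_refcnt */
    unsigned int wmem_alloc;   /* models sk->sk_wmem_alloc */
    bool freed;                /* set instead of actually freeing memory */
};

struct toy_skb {
    struct toy_sock *sk;
    unsigned int truesize;
};

void toy_sk_init(struct toy_sock *sk)
{
    sk->refcnt = 1;
    sk->wmem_alloc = 1;        /* the initial reference from sk_alloc() */
    sk->freed = false;
}

/* Models the charge done when an skb is bound to the socket. */
void toy_charge(struct toy_sock *sk, struct toy_skb *skb, unsigned int truesize)
{
    skb->sk = sk;
    skb->truesize = truesize;
    sk->wmem_alloc += truesize;
}

/* Models sock_put() -> sk_free(): dropping the last sk_refcnt removes
 * only the initial 1 from wmem_alloc. */
void toy_sock_put(struct toy_sock *sk)
{
    assert(sk->refcnt > 0);
    if (--sk->refcnt == 0) {
        sk->wmem_alloc -= 1;
        if (sk->wmem_alloc == 0)
            sk->freed = true;
    }
}

/* Models skb_orphan() -> sock_wfree(): uncharge the skb and release the
 * socket if this was the last outstanding write memory reference. */
void toy_skb_orphan(struct toy_skb *skb)
{
    struct toy_sock *sk = skb->sk;

    if (!sk)
        return;
    assert(sk->wmem_alloc >= skb->truesize);
    sk->wmem_alloc -= skb->truesize;
    if (sk->wmem_alloc == 0)
        sk->freed = true;
    skb->sk = NULL;
}
```

In this model, closing a socket that still has one skb in flight (toy_sock_put() after toy_charge()) leaves the socket alive, and the later toy_skb_orphan() on that skb is what finally marks it freed. That ordering is the one that matters for the bug discussed later.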

2. Network Device

2.1. Create Link

The rtnl_newlink_create() function creates a new network interface (link). For example, a process can send AF_NETLINK requests to create a new ipvlan link.

    static int rtnl_newlink_create(struct sk_buff *skb, struct ifinfomsg *ifm,
                                   const struct rtnl_link_ops *ops,
                                   const struct nlmsghdr *nlh,
                                   struct nlattr **tb, struct nlattr **data,
                                   struct netlink_ext_ack *extack)
    {
        // [...]
        if (ops->newlink)
            err = ops->newlink(link_net ? : net, dev, tb, data, extack);
        else
            err = register_netdevice(dev); // <----------
        // [...]
    }

    int register_netdevice(struct net_device *dev)
    {
        // [...]
        if (dev->netdev_ops->ndo_init) {
            ret = dev->netdev_ops->ndo_init(dev); // <----------
            // [...]
        }
        // [...]
    }

    static const struct net_device_ops ipvlan_netdev_ops = {
        .ndo_init = ipvlan_init, // <----------
        .ndo_open = ipvlan_open,
        .ndo_start_xmit = ipvlan_start_xmit,
        // [...]
    };

2.2. Activate

The device is down by default. To activate it, run a command such as ip link set dev <name> up, and the dev_open() function will be invoked to configure it.

    int dev_open(struct net_device *dev, struct netlink_ext_ack *extack)
    {
        int ret;

        if (dev->flags & IFF_UP) // already activated
            return 0;

        ret = __dev_open(dev, extack); // <----------
        if (ret < 0)
            return ret;
        // [...]
    }

    static int __dev_open(struct net_device *dev, struct netlink_ext_ack *extack)
    {
        // [...]
        if (!ret && ops->ndo_open)
            ret = ops->ndo_open(dev); // <----------
        // [...]
    }
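Both the registration and the activation paths come down to calling through a per-device table of function pointers. As a rough illustration, here is a userspace sketch of that pattern; toy_dev, toy_dev_ops and TOY_IFF_UP are hypothetical names I introduce here, and the callbacks only count how often they run.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_IFF_UP 0x1          /* local stand-in for IFF_UP */

struct toy_dev;

/* Hypothetical, trimmed-down analogue of struct net_device_ops. */
struct toy_dev_ops {
    int (*ndo_init)(struct toy_dev *dev);
    int (*ndo_open)(struct toy_dev *dev);
};

struct toy_dev {
    const struct toy_dev_ops *ops;
    unsigned int flags;
    int init_calls;
    int open_calls;
};

static int toy_init(struct toy_dev *dev) { dev->init_calls++; return 0; }
static int toy_open(struct toy_dev *dev) { dev->open_calls++; return 0; }

const struct toy_dev_ops toy_ops = {
    .ndo_init = toy_init,
    .ndo_open = toy_open,
};

/* Models register_netdevice(): run the driver's init callback if present. */
int toy_register(struct toy_dev *dev, const struct toy_dev_ops *ops)
{
    assert(dev != NULL && ops != NULL);
    dev->ops = ops;
    dev->flags = 0;             /* the device starts out down */
    dev->init_calls = 0;
    dev->open_calls = 0;
    if (ops->ndo_init)
        return ops->ndo_init(dev);
    return 0;
}

/* Models dev_open(): a no-op when the device is already up. */
int toy_dev_open(struct toy_dev *dev)
{
    int ret = 0;

    if (dev->flags & TOY_IFF_UP)
        return 0;
    if (dev->ops->ndo_open)
        ret = dev->ops->ndo_open(dev);
    if (ret < 0)
        return ret;
    dev->flags |= TOY_IFF_UP;
    return 0;
}
```

Calling toy_register() runs the init callback once and leaves the device down; calling toy_dev_open() twice runs the open callback only once, because the second call returns early on the up flag.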

2.3. Transmit

Queued packets are eventually handed to dev_hard_start_xmit(), which passes each skb to the device's transmit handler.

    struct sk_buff *dev_hard_start_xmit(struct sk_buff *first, struct net_device *dev,
                                        struct netdev_queue *txq, int *ret)
    {
        struct sk_buff *skb = first;

        while (skb) {
            struct sk_buff *next = skb->next;

            // [...]
            rc = xmit_one(skb, dev, txq, next != NULL); // <----------
            // [...]
        }
    }

    static int xmit_one(struct sk_buff *skb, struct net_device *dev,
                        struct netdev_queue *txq, bool more)
    {
        unsigned int len;
        int rc;

        // [...]
        len = skb->len;
        rc = netdev_start_xmit(skb, dev, txq, more); // <----------
    }

    static inline netdev_tx_t netdev_start_xmit(struct sk_buff *skb, struct net_device *dev,
                                                struct netdev_queue *txq, bool more)
    {
        const struct net_device_ops *ops = dev->netdev_ops; // &ipvlan_netdev_ops

        // [...]
        rc = __netdev_start_xmit(ops, skb, dev, more); // <----------
    }

    static inline netdev_tx_t __netdev_start_xmit(const struct net_device_ops *ops,
                                                  struct sk_buff *skb, struct net_device *dev,
                                                  bool more)
    {
        __this_cpu_write(softnet_data.xmit.more, more);
        return ops->ndo_start_xmit(skb, dev); // <----------
    }

The ipvlan_start_xmit() function serves as the transmit handler for ipvlan devices.

    static netdev_tx_t ipvlan_start_xmit(struct sk_buff *skb,
                                         struct net_device *dev)
    {
        ret = ipvlan_queue_xmit(skb, dev); // <----------
        // [...]
    }

    int ipvlan_queue_xmit(struct sk_buff *skb, struct net_device *dev)
    {
        // [...]
        switch(port->mode) {
        // [...]
        case IPVLAN_MODE_L3S:
            return ipvlan_xmit_mode_l3(skb, dev); // <----------
        }
        // [...]
    }

    static int ipvlan_xmit_mode_l3(struct sk_buff *skb, struct net_device *dev)
    {
        // [...]
        return ipvlan_process_outbound(skb); // <----------
    }

    static int ipvlan_process_outbound(struct sk_buff *skb)
    {
        // [...]
        else if (skb->protocol == htons(ETH_P_IP))
            ret = ipvlan_process_v4_outbound(skb); // <----------
        // [...]
    }

    static noinline_for_stack int ipvlan_process_v4_outbound(struct sk_buff *skb)
    {
        err = ip_local_out(net, skb->sk, skb);
        // [...]
        return ret;
    }

Finally, the ip_local_out() function is called to send the locally generated packet out to the network. Note that its sk argument is skb->sk as read by the caller, so ip_local_out() holds its own copy of the pointer.

    int ip_local_out(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        int err;

        err = __ip_local_out(net, sk, skb);
        if (likely(err == 1))
            err = dst_output(net, sk, skb);

        return err;
    }

3. Root Cause

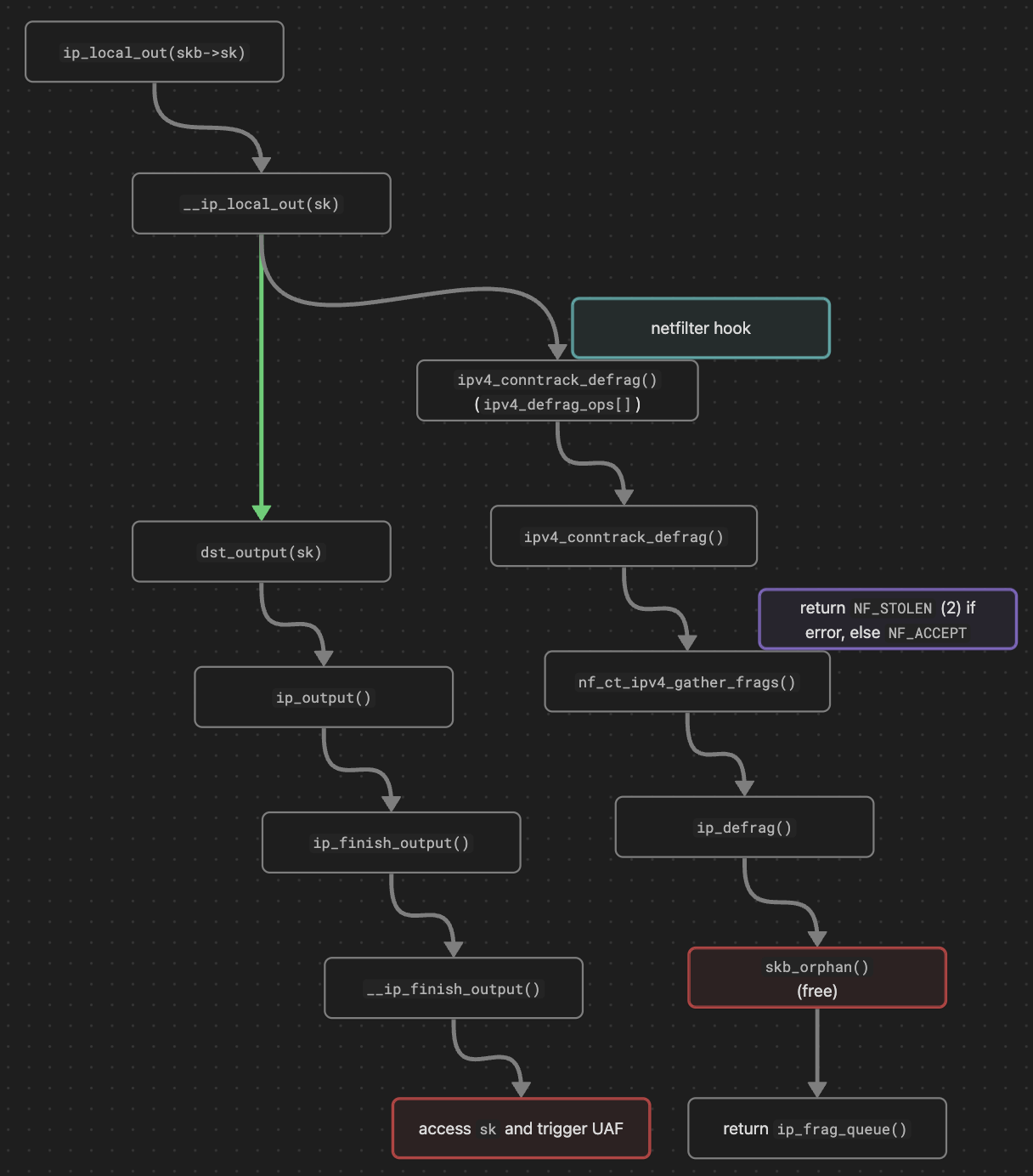

A defrag netfilter hook [1] can be triggered in __ip_local_out(), invoking the ipv4_conntrack_defrag() function.

    int __ip_local_out(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        // [...]
        skb->protocol = htons(ETH_P_IP);

        return nf_hook(NFPROTO_IPV4, NF_INET_LOCAL_OUT, // [1]
                       net, sk, skb, NULL, skb_dst(skb)->dev,
                       dst_output);
    }

    static const struct nf_hook_ops ipv4_defrag_ops[] = {
        // [...]
        {
            .hook = ipv4_conntrack_defrag, // <----------
            .pf = NFPROTO_IPV4,
            .hooknum = NF_INET_LOCAL_OUT,
            .priority = NF_IP_PRI_CONNTRACK_DEFRAG,
        },
    };
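The err == 1 check in ip_local_out() only makes sense once you know what the hook traversal returns. Below is a simplified userspace sketch of that control flow. Everything named toy_* is hypothetical, the verdict values are local to the sketch, and the completes_datagram flag is my stand-in for whether gathering the fragment finishes a datagram; I am assuming the traversal yields 1 when every hook accepts and 0 when a hook keeps the packet.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-ins for netfilter verdicts; the values are local to this sketch. */
enum toy_verdict { TOY_ACCEPT, TOY_STOLEN };

struct toy_pkt {
    int is_fragment;
    int completes_datagram;    /* hypothetical: fragment finishes reassembly */
    int output_calls;          /* how many times the output step ran */
};

typedef enum toy_verdict (*toy_hook_fn)(struct toy_pkt *pkt);

/* Models a defrag-style hook: an incomplete fragment is kept by the hook,
 * anything else is passed on. */
enum toy_verdict toy_defrag_hook(struct toy_pkt *pkt)
{
    if (pkt->is_fragment && !pkt->completes_datagram)
        return TOY_STOLEN;
    return TOY_ACCEPT;
}

enum toy_verdict toy_accept_hook(struct toy_pkt *pkt)
{
    (void)pkt;
    return TOY_ACCEPT;
}

/* Simplified hook traversal: 1 means every hook accepted and the caller
 * should continue; 0 means a hook took ownership of the packet. */
int toy_run_hooks(const toy_hook_fn *hooks, size_t n, struct toy_pkt *pkt)
{
    for (size_t i = 0; i < n; i++) {
        assert(hooks[i] != NULL);
        if (hooks[i](pkt) == TOY_STOLEN)
            return 0;
    }
    return 1;
}

/* Models the shape of ip_local_out(): continue to the output step only
 * when the hook traversal returned 1. */
int toy_local_out(const toy_hook_fn *hooks, size_t n, struct toy_pkt *pkt)
{
    int err = toy_run_hooks(hooks, n, pkt);

    if (err == 1) {
        pkt->output_calls++;   /* stands in for dst_output() */
        err = 0;
    }
    return err;
}
```

The point of the model is that the output step still runs after the defrag hook whenever the hook accepts, so whatever the hook did to the packet's owner is already done by the time the output step looks at its arguments.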

ipv4_conntrack_defrag() ends up calling skb_orphan() [2] within the internal function ip_defrag(), assuming it is safe to unbind the skb from the sk. If the skb holds the last reference to the sk, the sk object will be freed at this point.

    static unsigned int ipv4_conntrack_defrag(void *priv,
                                              struct sk_buff *skb,
                                              const struct nf_hook_state *state)
    {
        struct sock *sk = skb->sk;

        // [...]
        if (ip_is_fragment(ip_hdr(skb))) {
            // [...]
            if (nf_ct_ipv4_gather_frags(state->net, skb, user)) // <----------
                return NF_STOLEN;
        }
        return NF_ACCEPT;
    }

    static int nf_ct_ipv4_gather_frags(struct net *net, struct sk_buff *skb,
                                       u_int32_t user)
    {
        local_bh_disable();
        err = ip_defrag(net, skb, user); // <----------
        local_bh_enable();
    }

    int ip_defrag(struct net *net, struct sk_buff *skb, u32 user)
    {
        struct net_device *dev = skb->dev ? : skb_dst(skb)->dev;
        struct ipq *qp;
        int ret;

        skb_orphan(skb); // <---------- [2], sk is freed

        qp = ip_find(net, ip_hdr(skb), user, vif);
        // [...]
        spin_lock(&qp->q.lock);
        ret = ip_frag_queue(qp, skb);
        spin_unlock(&qp->q.lock);

        ipq_put(qp);
        return ret;
    }

However, ip_local_out() still holds its own copy of the sk pointer, which now dangles. It passes the freed sk object to dst_output() as a parameter [3], and the output path uses it [4], leading to a UAF.

    static inline int dst_output(struct net *net, struct sock *sk /* [3] */, struct sk_buff *skb)
    {
        return INDIRECT_CALL_INET(skb_dst(skb)->output,
                                  ip6_output, ip_output,
                                  net, sk, skb);
    }

    int ip_output(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        struct net_device *dev = skb_dst(skb)->dev, *indev = skb->dev;

        skb->dev = dev;
        skb->protocol = htons(ETH_P_IP);

        return NF_HOOK_COND(NFPROTO_IPV4, NF_INET_POST_ROUTING,
                            net, sk, skb, indev, dev,
                            ip_finish_output, // <----------
                            !(IPCB(skb)->flags & IPSKB_REROUTED));
    }

    static int ip_finish_output(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        int ret;

        // [...]
        switch (ret) {
        case NET_XMIT_SUCCESS:
            return __ip_finish_output(net, sk, skb); // <----------
        // [...]
        }
    }

    static int __ip_finish_output(struct net *net, struct sock *sk, struct sk_buff *skb)
    {
        unsigned int mtu;

        // [...]
        mtu = ip_skb_dst_mtu(sk, skb); // <---------- [4], trigger UAF
        // [...]
    }
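The whole bug fits into a few lines of userspace C if the release is modelled with a flag instead of a real free. The sketch below is only an approximation of the flow described above: all toy_* names are hypothetical, the socket is assumed to be already closed when its write memory counter equals the skb's truesize, and reading the freed flag stands in for the stale dereference in the output path.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical userspace model. "freed" is a flag so that the stale
 * access can be observed without real undefined behaviour. */
struct toy_sock {
    unsigned int wmem_alloc;   /* models sk->sk_wmem_alloc */
    bool freed;
};

struct toy_skb {
    struct toy_sock *sk;
    unsigned int truesize;
    bool is_fragment;
};

/* Models skb_orphan() with sock_wfree() as the destructor. */
static void toy_skb_orphan(struct toy_skb *skb)
{
    struct toy_sock *sk = skb->sk;

    if (!sk)
        return;
    assert(sk->wmem_alloc >= skb->truesize);
    sk->wmem_alloc -= skb->truesize;
    if (sk->wmem_alloc == 0)
        sk->freed = true;      /* last reference gone: sk is released */
    skb->sk = NULL;
}

/* Models the defrag hook: fragments are orphaned, as in ip_defrag(). */
static void toy_defrag_hook(struct toy_skb *skb)
{
    if (skb->is_fragment)
        toy_skb_orphan(skb);
}

/* Models ip_local_out(): sk is captured by the caller before the hook
 * runs and is then handed to the output step. Returns true if the output
 * step would touch a socket that has already been released. */
bool toy_local_out_uses_stale_sk(struct toy_sock *sk, struct toy_skb *skb)
{
    toy_defrag_hook(skb);      /* may release sk behind the caller's back */
    return sk->freed;          /* stands in for ip_skb_dst_mtu(sk, skb) */
}
```

In this model the stale access shows up only when both conditions hold: the packet is a fragment, and the skb carries the last write memory reference (the socket was already closed). A fragment from a still-open socket, or a non-fragment from a closed one, does not trigger it.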

The simplified execution flow is illustrated below: